Swire wore a tie with his usual denim, a concession for today’s big meeting. His rugged, scruffy look always said “I work undercover, so eat yer heart out.” Field agents had an escape clause from the FBI’s prim sartorial reputation. Lorenzo Lessig, on the other hand, looked dapper, even professorial, using his briefcase as a seat to protect the rayon of his suit from rough concrete. He stood up, brushing away nonexistent crumbs, then offered me his arm in a courtly, Latin manner. I turned it into a manly handshake. A thing we do.

“You didn’t save me any?”

I glanced with a moue toward the crushed and unpromising wrappers.

“Didn’t you just have lunch with your father?” Swire’s headshake rattled a ponytail that might once have been dirty blond, though now it seemed more dirty, with fading hints that presaged early gray.

“Ancient history. Ten minutes ago. Next time, bring me something anyway.”

“Pregnancy, God’s back door to gluttony.”

“That’s not even clever.”

He shrugged. Lessig grinned. “Well I think it is wondrous. And I truly must thank you, Isabel, for giving me the password to view life’s miracle.”

Born in Tampa to a New York retiree and a nurse from Trinidad, he truly had no excuse for putting on these Latin airs. But Lorenzo wore the role well. Also, he spent more time undercover than Pete did.

“To view life’s... Oh yeah. The womb cam. Sometimes I forget it’s in there.”

He smiled. Perfect teeth, aquiline nose and dark complexion. “I think perhaps you tell a lie, Isabel. I will wager that you check developments, many times each day. I know that I would, were I you.”

Involuntary blush response. Find a distraction. I spotted one out the corner of my eye.

“Cheez-it, guys. The fuzz.”

They glanced around and saw the same cluster of movement -- half a dozen men and women plus two ambis gathered at the curb, where heavyset drivers in black sunglasses turned to drive away official-looking SUVs after unloading very important cargo. Ascending the broad steps, all of the former passengers were attired in Washington take-me-very-seriously suits. Only a trained eye could tell that the jackets were made of new, bullet-resisting nano-weave. Any conversation was murmured and innocuous. These days, you simply did not discuss business out of doors.

“Deputy fuzz, you mean,” Pete commented. “We all better go in, too, or Her Nibs will assign us to auditing pot dispensaries in Alabama.”

Her Nibs -- Deputy Director Molly Ringwreath Rogers -- glanced briefly my way as she passed with her entourage. A guarded expression crossed her sharply sculpted face as she gave the briefest nod, before resuming her upward stride without interruption. Athletic. I admired how high she could lift those knees. My own clamber felt awkward, crablike, by comparison. Though I shrugged Lessig’s gallant hand off my arm. Not yet, Lorenzo. I’ll manage alone, for now.

Others were converging for the big meeting. Agents, researchers, lawyers and administrators, passing through the great doors and across a broad, polished FBI seal, inlaid across the atrium floor.

“I’ll go and save us some seats,” Pete said, before hurrying ahead. I couldn’t blame him. In fact, it was probably the right thing to do... though it meant that he missed the grand, surprise entrance that folks would be talking about for... well, forever.

Lorenzo and I entered ___ Auditorium almost last, lurking at the back and looking for Swire. Most attendees were already seated as the Deputy Director and her chief aides took to plush chairs, onstage to the far left, leaving plenty of room for today’s speakers. I spotted Pete, waving at us with two empty spaces -- one on the aisle for me. I started to nudge Lessig --

-- when a hand squeezed gently on my shoulder and a rather deep, resonant voice asked: “Would you pardon me, Miss?”

Tall, square of jaw, with peppered hair slicked in a distinguished cut, the newcomer wore an expensively tailored, dark blue suit with an American flag pin and red silk tie. His gaze swiftly encompassed my condition.

“Sorry. I mean, pardon me, Madam.”

“Sure,” I answered, shuffling closer to Lorenzo, wondering. Who makes such distinctions, anymore, because of pregnancy?

With my bulk no longer obstructing his path, the tall man murmured a low thank-you and swept on past with a determined air. He looked familiar. As if I really ought to recognize...

I wasn’t alone in that reaction. Heads turned as elbows jabbed ribs and a wave of sudden silence followed the newcomer, spreading rapidly as he strode down a long aisle toward the front of the hall.

For once, Molly Rogers was slow on the uptake. It took an urgent whisper from her assistant for realization to dawn.

Hurriedly standing, Rogers stepped forward even as the tall man made short work of eight steps leading to the stage, taking them two at a time.

We could all hear every word.

“Senator. My... what an... unexpected honor.”

His smile. Later image analysis would reveal tension mixed with eager anticipation that had the taut skin of his cheek throbbing an eleven hertz beat. At the time, from my great distance away, his grin appeared suddenly both familiar and ingratiating. Confident and absolutely determined.

“It’s Sean fucking McDean!” Swire said, and not just him. The same words skittered around us. Well, pretty much the same. At least the McDean part. Is there an echo in here?

“No shit?” I was sarcastic, which won a glance of mild disapproval from Lorenzo.

“Good afternoon, Deputy Director,” the senior Senator from Delaware said, loudly enough for all to discern, even without the amp plugs that many agents were now pushing into their ears. “I am so sorry to be causing a disruption.”

“Well... sir...” Molly Rogers looked nonplussed. “Is there something we can do for you, Senator? We were about to convene an important meeting --”

“About the Big Deal. Yes. Very consequential, Madam Deputy Director. Even momentous. Still, I feel obliged and compelled to do something impudent. Something shocking, and yet urgent for the sake of our republic. May I hijack your meeting and your audience for just five minutes? I promise, on my honor and on my very soul, that you will all find it both interesting and worth the time.”

Still rather stunned, Rogers started to stammer a weak objection, but found herself with no one to talk to, as McDean turned and strode, in three lanky steps, to the nearby podium. From a jacket pocket he pulled out his slim pen-phone and laid it into the lectern’s regular receptacle, turning the pen into a microphone. At once, his voice filled the hall.

“Ladies and gentlemen of the FBI, those of you both present and tuning in from afar. Thank you for your kind indulgence and flexibility in allowing me a few brief moments of your valuable time. I’ll let you get back to your scheduled, portentous topic shortly. But first, let me promise this. What I have to say right now will top anything you expected to witness today!”

He didn’t bother introducing himself, I noted. Of course, Sean McDean was moderately well-known, a mid-to-upper ranked senator and committee chairman -- though which committee escaped me. I saw agents and techies nearby and all across the hall whip out their phones and pull open scroll screens, or else slip on GuGlasses in order to start glomming overlay data, adding realtime info-gloss as the senator spoke. Both Lorenzo and Pete did that, but I preferred letting it all wash over me, unadorned.

“I come before you to proclaim and accuse -- as Emile Zola did more than a century ago -- that a terrible crime is taking place! A conspiracy against the United States of America and against the very possibility of open, democratic government around this increasingly vexed world of ours.”

Ah. I realized -- or briefly thought I did -- what he had come to talk about. The thing on everybody’s lips -- the Big Deal -- a world treaty whose legal implications, especially for the FBI, were supposed to be today’s topic. Our scheduled speakers -- one each from Justice, State and Quantico, along with a professor from Georgetown -- sat in the front row. Pre-empted but as fascinated as anybody.

“First though,” McDean lifted a hand. “I must ask a question.” He leaned toward us.

“Are any of you presently aware of major scandals that involve me?”

The non-sequitur made me blink in surprise. I could tell that it rocked back several of those around me.

“Not minor stuff!” he continued hurriedly. “None of the usual complaints about this or that misjudged or badly reported campaign contribution. Or rumors that I fudged a grade while at Princeton. Or tales that my son got favors in his bid for that defense contract. Forget about the usual pile of gritty stuff that any politician compiles after thirty years of public service. Mostly baloney but maybe some minor or intermediate sins to atone for... with most of it by now pretty familiar and chewed over by the press. Putting all of that aside...

“...please raise your hand if you are aware of something really, really big that’s about to pop, concerning Senator Sean McDean!”

He paused, and was not the only one turning to scan the audience. All across the hall, heads rotated. We all looked around. No hands went up.

“Now I know that’s not a perfect test,” he continued, voice quavering a little, on a harmonic that denoted tension, blended with tenacity. “Tomorrow, possibly even as soon as I finish up here, some of you will say that you were aware of such a scandal brewing, but could not raise your hand because of legal protocol, or confidentiality, or proper procedure or some similar, lame excuse. When these colleagues speak, note who they are! It’s important. And I’ll tell you why.

“You see, I am being blackmailed.”

Senator McDean allowed that to sink in. The hall was dead silent.

“I was recently shown ‘evidence’ of something awful.”

He did not lift hands to gesture quotation marks, but his voice put them there.

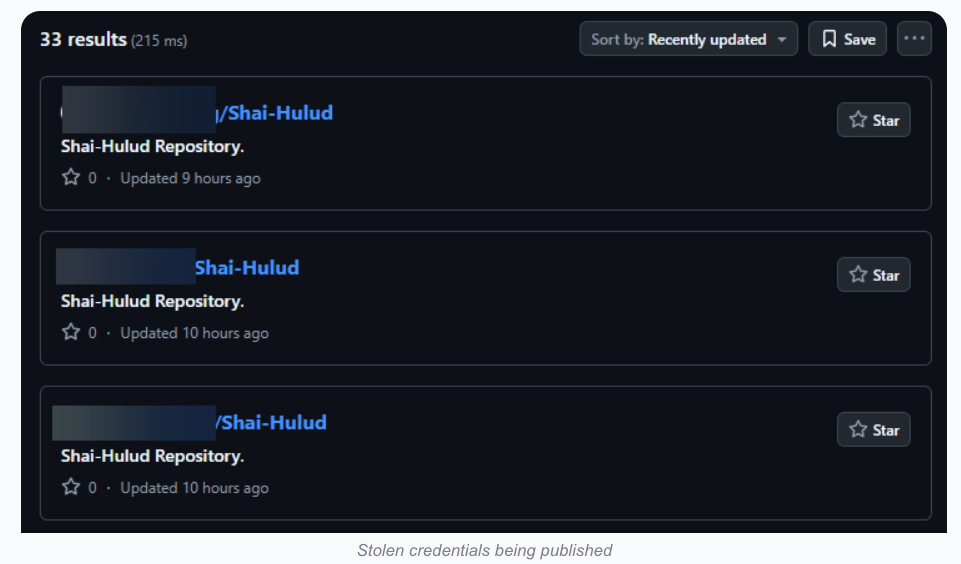

“Evidence that was concocted using vividly realistic modern methods, even more advanced than those currently used in Hollywood. I was told that these horrid materials would be revealed to both authorities and the public, if I didn’t comply. Help pass or modify certain bills. Block others from becoming law. The choice I was offered was simple. Become their lap-dog, their wholly-owned U.S. Senator... or else face ruin.”

Now, silence gave way to a low murmur. Heads turned and whispers were exchanged. I glimpsed Lorenzo, wearing heavy GuGoggles, use his fingers to pluck at thin air and flick something invisible to the bare-eyed -- something virtual -- past me over to Pete. A link he must have found, online. Pete waved it away and took off his own pair of specs, joining me in the much more fascinating real moment.

“I strung the conspirators along for as long as I could,” McDean continued. “Pretending to play along. I truly was at a loss, you see. Would people believe the nasty, so-called evidence that had been concocted about me? Was my life of service at an end? I confess that -- to my everlasting shame -- the temptation to cave-in, though nauseating, did occur to me. I felt trapped. The possibility of prison or public humiliation can break some men...

“...or else anger can steel the mind!

“And so, I got past my moment of weakness. Discreetly, I did some research and came to a stark, horrified realization.

“I am not the only one!”

Senator McDean gripped the edges of the lectern so hard that I heard the wood complain with a faint crack.

“Let me ask you all something,” he said in a voice suddenly gone both tense and hushed. “Have you ever stopped to wonder why our politics started getting so weird, about a generation ago? I’m not just talking about the cable, web and mesh hate-jockeys who keep dividing the people into ever smaller classes of mutual resentment, suckling on the teat of indignant resentment. Nor do I mean the tsunamis of cash that flood through this town, both overt and covert. Indeed, the Big Deal is supposed to partly resolve or reduce that part of things. We can hope. But don’t hold your breath.

“No, by weird, I’m talking about the way some politicians, leaders, civil servants and other figures of importance keep saying one thing and then doing another. They claim to maintain consistency... adherence to a steady philosophy and agenda. Yet, whatever they touch actually winds up heading in a different direction! Social conservatives who claim to be vigorously ‘pro-life’ or anti-gay, but who never deliver anything real and always seem to sabotage their side with some ill-chosen words. Did you ever wonder how they could be so stupid? Or negotiators wrangling new deals for health care or the environment... who somehow leave in place a loophole that leaves frackers and frokkers and big pharma companies free to do whatever they please?

“That’s the sort of thing my blackmailers wanted me to start doing! Maintain my public pose as a fighting reformer! But effectively make sure their subtle agenda kept moving forward! And I realized, it would kill me. I would die inside, if I went along.

“So I looked around.

“Hey, you all know I had a background in computer tech, before seeking public office. I dusted off some of those skills and did a pretty darned sophisticated statistical analysis, based on existing studies of cause and outcome here in Washington. And what do you know? I found clear signs!”

He leaned forward, intensity in his eyes. “There are hundreds of cases... maybe more! And that’s when I started putting it all together.

“While we were all obsessed trying to pass legislation to reduce the poisonous effects of money in politics -- from campaign contributions to outright bribery -- we forgot that blackmail is more powerful than other forms of corruption. If you bribe an official, he may then say ‘that’s enough for this year.’ She may be satiable. There will be limits to how far they’ll sell out their principles.

“But envision this. What if you have pictures of an under-secretary with a donkey?”

That roused titters of nervous laughter, especially from prudish Lorenzo.

“Or a congressman caught with -- what’s the expression? With a live boy or a dead girl? Suppose you have evidence that can send a major official to prison?

“Do you actually send him to prison? Or do you use it for leverage. Make him work for you, forever?

“That’s probably how it all started. Take some starry-eyed idealist determined to clean up this town... a freshman congressman or a brilliant administrative appointee. Invite him or her to a high-class party on a yacht. Separate him from the ones who keep him steady or who provide wisdom in his life. Maybe slip him some drugs or cater to a brief, bad impulse, snap some incriminating pictures, and you’ve got him in your pocket!

“Realizing this, I looked back at the number of times that I must’ve almost fallen for that kind of trap. In fact, as many of you know, I did fall once, many years ago, back when I was in the State Assembly! Though it was a simple, clean, consensual lapse, it still makes me twinge with shame. Only the forgiveness of a good woman -- and the people of my district -- let me put that episode behind us and -- with God’s help -- I’ve been a straight arrow, ever since.”

The Senator shook his head and suddenly veered in tone. I half jumped out of my seat when he pounded the lectern. Bang-echoes bounced around the auditorium.

“That’s why they resorted to faked evidence, using fantastic tools of image processing, so good that...”

McDean stopped, perhaps realizing how whiny he was starting to sound. Petulant and self-pitying. So he stood up straight. Letting go of the lectern, he took a couple of breaths, then resumed in a deeper timbre of flat determination.

“... fakery that’s so masterful, I hold out zero hope that my denials will be believed. I am resigned to facing a firestorm. Denunciations in the press. Repudiations by my colleagues. The curses of betrayed constituents.... And then there’s my faithful and beloved wife, who will endure hell standing by my side --”

His voice cracked at that point. Looking down at his hands. And I felt awed.

Either he is one hell of an actor... or else psychotic... or the bravest man I ever saw.

Silence ensued. It bore on and on. Mere seconds that felt much longer, till Sean McDean finally lifted his gaze to sweep the room, steely-eyed.

“So why am I here? What reason could I possibly have to hector you fine, skilled professionals with this sad tale? The answer is simple.

“You see, I know my career is toast. I have just now sacrificed it, rather than succumb to evil plotters and become their tool. Their toy. But I don’t matter. Let me say that again. I don’t matter at all!

“I’ve come here today, spilling my guts and proclaiming the likelihood -- though I cannot prove it -- of a terrible conspiracy. Or maybe it’s being done by several different groups. My analysis was pretty crude and subjective. But I brought my accusation here, because you, here in this room, may be America’s last, best hope. Because, if I’m right, our republic is being suborned, and has been for a long time. And the plotters by now have inveigled their way through all the paths and portals and gears of power, taking control over the greatest nation on Earth.

“I came here today in order to spring their trap upon myself, before your very eyes, daring them to do their worst, and hoping that you --” he pointed into the audience, somewhere on the left side. “-- or you --” he pointed again. “Or you, or you, will be stirred to investigate all this, perhaps out of curiosity or patriotism or both, despite whatever your superiors tell you! Because some FBI officials may have good reasons to divert you from this matter. And others may be among the suborned... but they can’t get to all of you!”

Turning left and right, I saw a great many faces transfixed. Captivated. So -- apparently -- was Deputy Director Molly Ringwreath Rogers, who sat staring at the Senator, a look on her face that combined amazement and fascination with... could it be admiration? Was she actually swallowing this fantastic story? I saw her hand go to her ear, listening to something being said by a speaker bud. Muscles tensed along her throat and jaw as she subvocalized a reply, sensed by the pretty -- and functional -- hematite necklace that she wore. The sole accessorized adornment of her severe skirt-suit.

“I came here...” McDean continued, in a tone I recognized. That of an experienced stump speaker, cranking up the drama toward a big, concluding climax. “I came to ask some of you -- as many as may accept the challenge, the risk, the duty -- to investigate the charges that I’ve raised today! Find proof. Uncover the conspiracy! Reveal this plot and pillory the bastards in the harsh light of truth.”

McDean spread his arms.

“But there is another group I’m appealing to right now. Folks who aren’t in this room, but who will doubtless see the recordings later, as they splash and slosh around the world.

“I’m talking about... I am talking to... all you other blackmail victims out there. Men and women who now find yourselves mired in a snare of threats and despair.

“Perhaps you thought you were the only one. Or among just a few trapped souls. You may even have joined the conspirators by now, rationalizing that their goals are somehow right, as a way to escape self-loathing. A psychological retreat -- your own, personal Stockholm Syndrome.

“Still, in your heart, you know it’s wrong. And beneath it all, you felt helpless, alone... so terribly alone!

“But let me tell you now -- you aren’t alone! Moreover, there is still redemption. It can be yours!

“Just follow my example. Stand up for your country. Find a way to turn the tables. Denounce the sons of bitches and take the resulting heat bravely.

“Who knows, there may be rewards beyond reckoning, for the first few to come forward! Whistleblower prizes? Book deals? Even forgiveness for whatever drove you to desperate submission. Especially if you’re among the first to step up.

“The biggest reward of all? The wondrous feeling that will come with release from your prison! From doing the right thing at last.

“You don’t believe it works that way?

“Look at me!”

At that point, Senator McDean surprised us all by smiling. By grinning.

“I am about to be ruined, yet I have done my duty ... and I am the happiest man right now on the face of this Earth.”

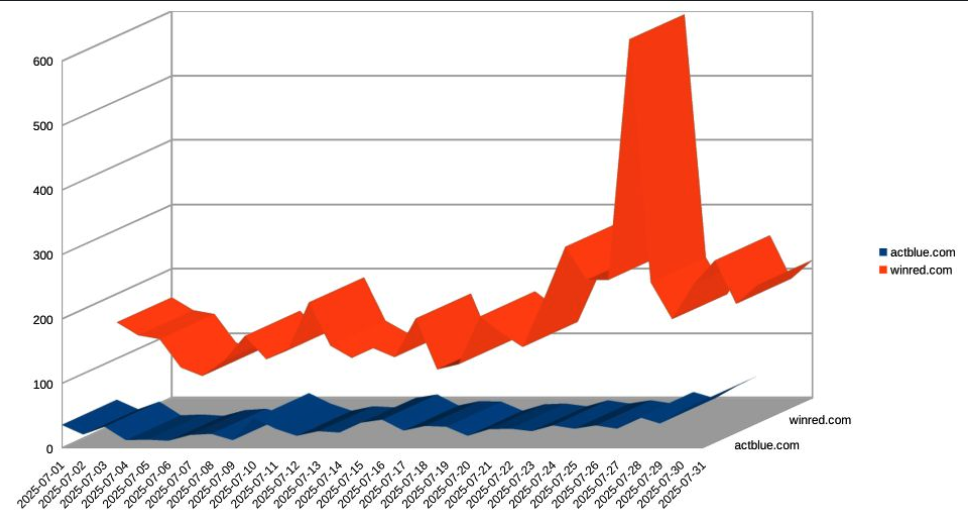

Start of fourth quarter of the year. The end of year is feeling close!

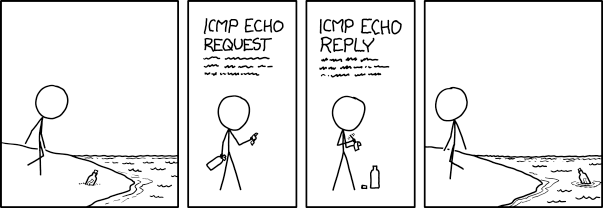

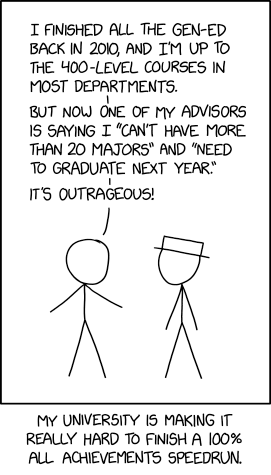

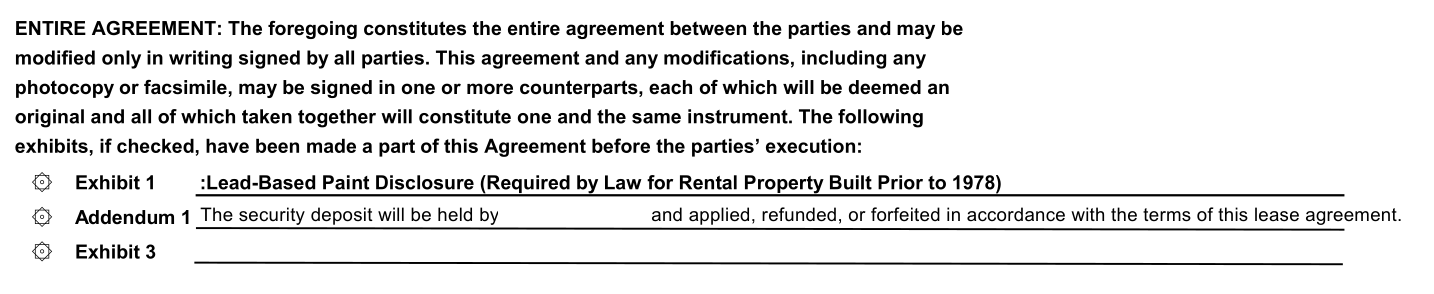

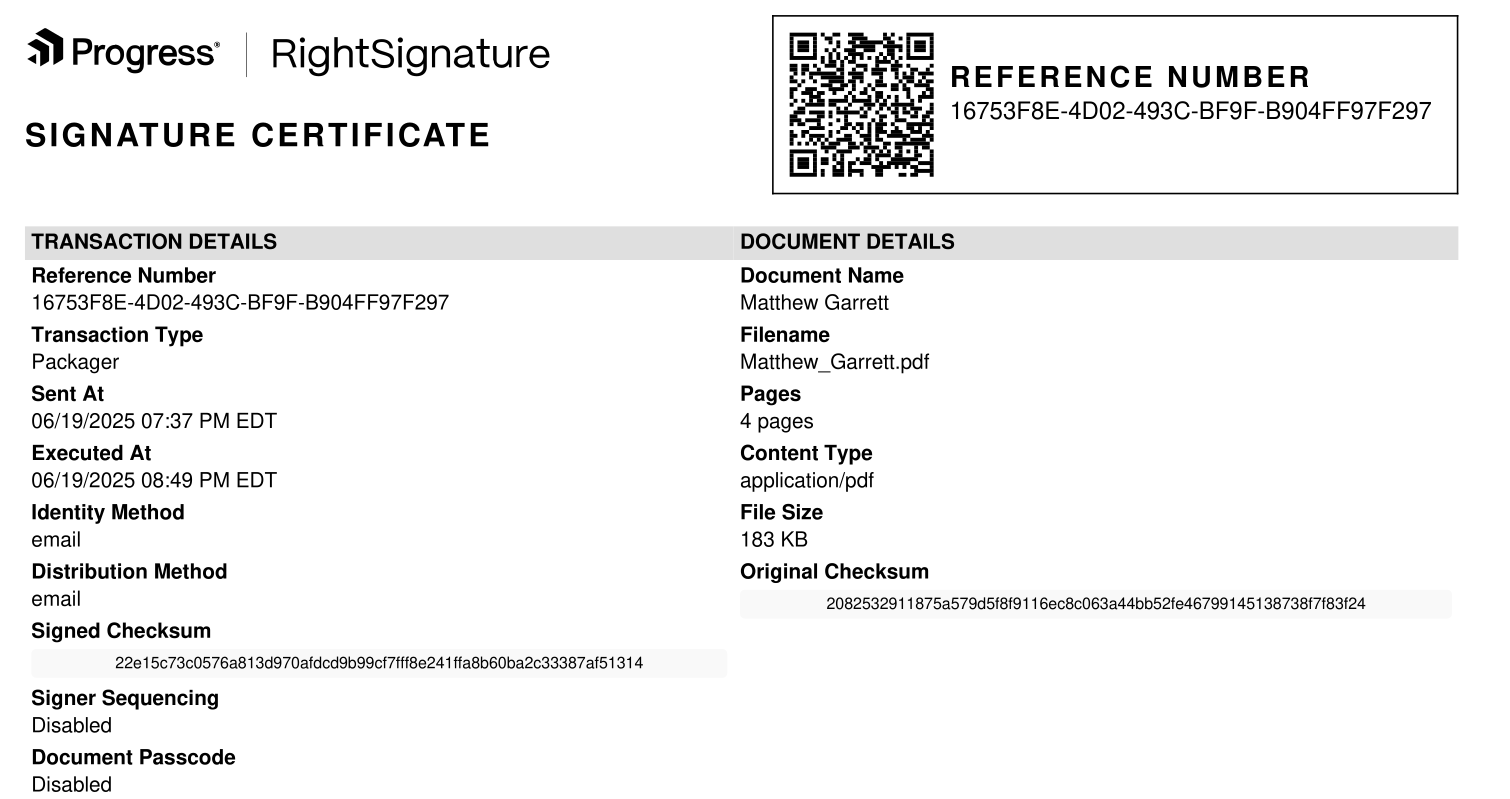

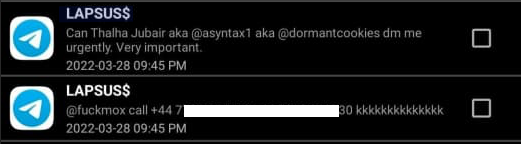

I had to rent a house for a couple of months recently, which is long enough in California that it pushes you into proper tenant protection law. As landlords tend to do, they failed to return my security deposit within the 21 days

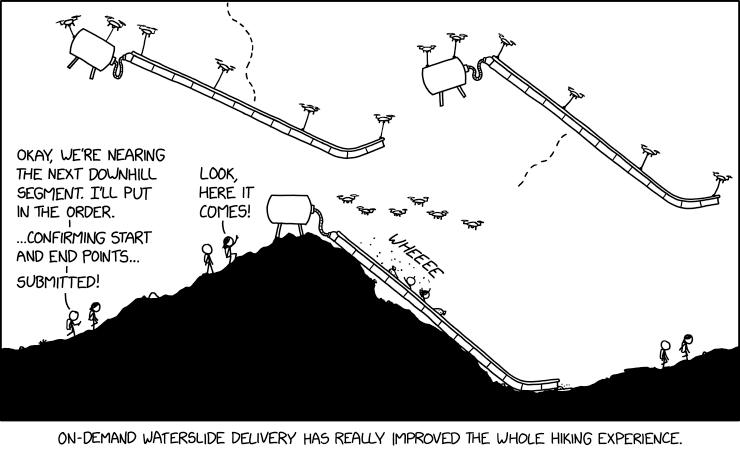

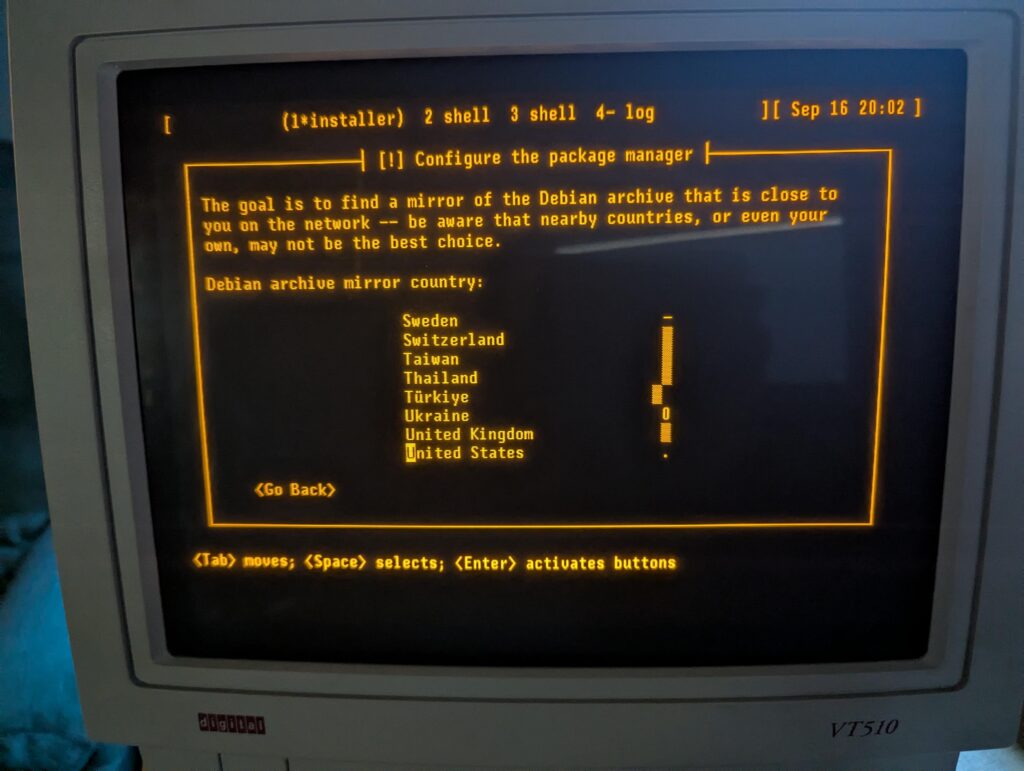

![Welcome to the Linguistics Department - It has been [2] [DAYS] since someone noticed that the Biology Department sign has a one-day-long singular/plural disagreement after it resets. Welcome to the Linguistics Department - It has been [2] [DAYS] since someone noticed that the Biology Department sign has a one-day-long singular/plural disagreement after it resets.](https://imgs.xkcd.com/comics/biology_department.png)