Think of the Web as a digital territory with its own social contract. In 2014, Tim Berners-Lee called for a “Magna Carta for the Web” to restore the balance of power between individuals and institutions. This mirrors the original charter’s purpose: ensuring that those who occupy a territory have a meaningful stake in its governance.

Web 3.0—the distributed, decentralized Web of tomorrow—is finally poised to change the Internet’s dynamic by returning ownership to data creators. This will change many things about what’s often described as the “CIA triad” of digital security: confidentiality, integrity, and availability. Of those three features, data integrity will become of paramount importance.

When we have agency in digital spaces, we naturally maintain their integrity—protecting them from deterioration and shaping them with intention. But in territories controlled by distant platforms, where we’re merely temporary visitors, that connection frays. A disconnect emerges between those who benefit from data and those who bear the consequences of compromised integrity. Like homeowners who care deeply about maintaining the property they own, users in the Web 3.0 paradigm will become stewards of their personal digital spaces.

This will be critical in a world where AI agents don’t just answer our questions but act on our behalf. These agents may execute financial transactions, coordinate complex workflows, and autonomously operate critical infrastructure, making decisions that ripple through entire industries. As digital agents become more autonomous and interconnected, the question is no longer whether we will trust AI but what that trust is built upon. In the new age we’re entering, the foundation isn’t intelligence or efficiency—it’s integrity.

What Is Data Integrity?

In information systems, integrity is the guarantee that data will not be modified without authorization, and that all transformations are verifiable throughout the data’s life cycle. While availability ensures that systems are running and confidentiality prevents unauthorized access, integrity focuses on whether information is accurate, unaltered, and consistent across systems and over time.

It’s not a new idea. The undo button, which prevents accidental data loss, is an integrity feature. So is the reboot process, which returns a computer to a known good state. Checksums are an integrity feature; so are verifications of network transmission. Without integrity, security measures can backfire. Encrypting corrupted data just locks in errors. Systems that score high marks for availability but spread misinformation just become amplifiers of risk.
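As a minimal sketch of the checksum idea, the Python below records a SHA-256 digest of some data and checks the data against it later. The function names `checksum` and `is_intact` and the sample messages are hypothetical, chosen only for this illustration.

```python
import hashlib
import hmac


def checksum(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def is_intact(data: bytes, expected: str) -> bool:
    """Check data against a previously recorded digest."""
    # compare_digest compares in constant time.
    return hmac.compare_digest(checksum(data), expected)


original = b"transfer 100 to account 42"
recorded = checksum(original)

print(is_intact(original, recorded))                       # True
print(is_intact(b"transfer 900 to account 42", recorded))  # False
```

A bare digest like this catches accidental corruption. It does not stop an attacker who can replace both the data and the recorded digest, which is why keyed or signed variants are usually needed against deliberate tampering.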

All IT systems require some form of data integrity, but the need for it is especially pronounced in two areas today. First: Internet of Things devices interact directly with the physical world, so corrupted input or output can result in real-world harm. Second: AI systems are only as good as the integrity of the data they’re trained on, and the integrity of their decision-making processes. If that foundation is shaky, the results will be too.

Integrity manifests in four key areas. The first, input integrity, concerns the quality and authenticity of data entering a system. When this fails, consequences can be severe. In 2021, Facebook’s global outage was triggered by a single mistaken command—an input error missed by automated systems. Protecting input integrity requires robust authentication of data sources, cryptographic signing of sensor data, and diversity in input channels for cross-validation.
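One way to picture authenticated sensor data is a message authentication code attached to each reading. The sketch below uses HMAC-SHA256 from Python's standard library as a stand-in; it is a shared-key scheme, not the asymmetric digital signature a production system would more likely use. The key, field names, and the functions `sign_reading` and `verify_reading` are all assumptions made for this example.

```python
import hashlib
import hmac
import json

# Assumption: sensor and receiver share this secret out of band.
SHARED_KEY = b"demo-key-not-for-production"


def _canonical(reading: dict) -> bytes:
    # Sorted keys and fixed separators so both sides hash identical bytes.
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode()


def sign_reading(reading: dict, key: bytes = SHARED_KEY) -> dict:
    """Attach an HMAC-SHA256 tag to a sensor reading."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"reading": reading, "tag": tag}


def verify_reading(message: dict, key: bytes = SHARED_KEY) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, _canonical(message["reading"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


message = sign_reading({"sensor_id": "temp-01", "celsius": 21.5})
print(verify_reading(message))          # True

message["reading"]["celsius"] = 95.0    # altered after signing
print(verify_reading(message))          # False
```

A valid tag shows only that the reading came from a key holder and was not altered afterward. It says nothing about whether the sensor itself was faulty, which is where cross-validation across independent input channels comes in.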

The second issue is processing integrity, which ensures that systems transform inputs into outputs correctly. In 2003, the U.S.-Canada blackout affected 55 million people when a control-room process failed to refresh properly, resulting in damages exceeding US $6 billion. Safeguarding processing integrity means formally verifying algorithms, cryptographically protecting models, and monitoring systems for anomalous behavior.

Storage integrity covers the correctness of information as it’s stored and communicated. In 2023, the Federal Aviation Administration was forced to halt all U.S. departing flights because of a corrupted database file. Addressing this risk requires cryptographic approaches that make any modification computationally infeasible without detection, distributed storage systems to prevent single points of failure, and rigorous backup procedures.
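A hash chain is one simple example of a cryptographic approach that makes modification detectable: each stored record carries a hash that depends on the record before it. The following is a sketch under stated assumptions, not a description of any particular system; the record layout and the names `append`, `verify`, and `GENESIS` are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, data: dict) -> str:
    body = json.dumps(data, sort_keys=True).encode()
    return hashlib.sha256(prev_hash.encode() + body).hexdigest()


def append(chain: list, data: dict) -> None:
    """Add a record whose hash covers its data and the previous hash."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"data": data, "prev": prev_hash, "hash": _digest(prev_hash, data)})


def verify(chain: list) -> bool:
    """Walk the chain and recompute every link."""
    prev_hash = GENESIS
    for record in chain:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["data"]):
            return False
        prev_hash = record["hash"]
    return True


log = []
append(log, {"event": "record created", "id": 1})
append(log, {"event": "record updated", "id": 1})
append(log, {"event": "record published", "id": 1})
print(verify(log))                          # True

log[1]["data"]["event"] = "record deleted"  # silent edit to a stored record
print(verify(log))                          # False
```

The chain only helps if the most recent hash is kept somewhere an attacker cannot rewrite, such as a separate system or a signed checkpoint. Otherwise someone with full write access could recompute every hash after an edit.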

Finally, contextual integrity addresses the appropriate flow of information according to the norms of its larger context. It’s not enough for data to be accurate; it must also be used in ways that respect expectations and boundaries. For example, if a smart speaker listens in on casual family conversations and uses the data to build advertising profiles, that action would violate the expected boundaries of data collection. Preserving contextual integrity requires clear data-governance policies, principles that limit the use of data to its intended purposes, and mechanisms for enforcing information-flow constraints.

As AI systems increasingly make critical decisions with reduced human oversight, all these dimensions of integrity become essential.

The Need for Integrity in Web 3.0

As the digital landscape has shifted from Web 1.0 to Web 2.0 and now evolves toward Web 3.0, we’ve seen each era bring a different emphasis in the CIA triad of confidentiality, integrity, and availability.

Returning to our home metaphor: When simply having shelter is what matters most, availability takes priority—the house must exist and be functional. Once that foundation is secure, confidentiality becomes important—you need locks on your doors to keep others out. Only after these basics are established do you begin to consider integrity, to ensure that what’s inside the house remains trustworthy, unaltered, and consistent over time.

Web 1.0 of the 1990s prioritized making information available. Organizations digitized their content, putting it out there for anyone to access. In Web 2.0, the Web of today, platforms for e-commerce, social media, and cloud computing prioritize confidentiality, as personal data has become the Internet’s currency.

Somehow, integrity was largely lost along the way. In our current Web architecture, where control is centralized and removed from individual users, the concern for integrity has diminished. The massive social media platforms have created environments where no one feels responsible for the truthfulness or quality of what circulates.

Web 3.0 is poised to change this dynamic by returning ownership to the data owners. This is not speculative; it’s already emerging. For example, ActivityPub, the protocol behind decentralized social networks like Mastodon, combines content sharing with built-in attribution. Tim Berners-Lee’s Solid protocol restructures the Web around personal data pods with granular access controls.

These technologies prioritize integrity through cryptographic verification that proves authorship, decentralized architectures that eliminate vulnerable central authorities, machine-readable semantics that make meaning explicit—structured data formats that allow computers to understand participants and actions, such as “Alice performed surgery on Bob”—and transparent governance where rules are visible to all. As AI systems become more autonomous, communicating directly with one another via standardized protocols, these integrity controls will be essential for maintaining trust.

Why Data Integrity Matters in AI

For AI systems, integrity is crucial in four domains. The first is decision quality. With AI increasingly contributing to decision-making in health care, justice, and finance, the integrity of both data and models’ actions directly impacts human welfare. Accountability is the second domain. Understanding the causes of failures requires reliable logging, audit trails, and system records.

The third domain is the security relationships between components. Many authentication systems rely on the integrity of identity information and cryptographic keys. If these elements are compromised, malicious agents could impersonate trusted systems, potentially creating cascading failures as AI agents interact and make decisions based on corrupted credentials.

Finally, integrity matters in our public definitions of safety. Governments worldwide are introducing rules for AI that focus on data accuracy, transparent algorithms, and verifiable claims about system behavior. Integrity provides the basis for meeting these legal obligations.

The importance of integrity only grows as AI systems are entrusted with more critical applications and operate with less human oversight. While people can sometimes detect integrity lapses, autonomous systems may not only miss warning signs—they may exponentially increase the severity of breaches. Without assurances of integrity, organizations will not trust AI systems for important tasks, and we won’t realize the full potential of AI.

How to Build AI Systems With Integrity

Imagine an AI system as a home we’re building together. The integrity of this home doesn’t rest on a single security feature but on the thoughtful integration of many elements: solid foundations, well-constructed walls, clear pathways between rooms, and shared agreements about how spaces will be used.

We begin by laying the cornerstone: cryptographic verification. Digital signatures ensure that data lineage is traceable, much like a title deed proves ownership. Decentralized identifiers act as digital passports, allowing components to prove identity independently. When the front door of our AI home recognizes visitors through their own keys rather than through a vulnerable central doorman, we create resilience in the architecture of trust.

Formal verification methods enable us to mathematically prove the structural integrity of critical components, ensuring that systems can withstand pressures placed upon them—especially in high-stakes domains where lives may depend on an AI’s decision.

Just as a well-designed home creates separate spaces, trustworthy AI systems are built with thoughtful compartmentalization. We don’t rely on a single barrier but rather layer them to limit how problems in one area might affect others. Just as a kitchen fire is contained by fire doors and independent smoke alarms, training data is separated from the AI’s inferences and output to limit the impact of any single failure or breach.

Throughout this AI home, we build transparency into the design: Clear pathways from input to output are the equivalent of large windows that allow light into every corner. We install monitoring systems that continuously check for weaknesses, alerting us before small issues become catastrophic failures.

But a home isn’t just a physical structure; it’s also the agreements we make about how to live within it. Our governance frameworks act as these shared understandings. Before welcoming new residents, we provide them with certification standards. Just as landlords conduct credit checks, we conduct integrity assessments to evaluate newcomers. And we strive to be good neighbors, aligning our community agreements with broader societal expectations. Perhaps most important, we recognize that our AI home will shelter diverse individuals with varying needs. Our governance structures must reflect this diversity, bringing many stakeholders to the table. A truly trustworthy system cannot be designed only for its builders but must serve anyone authorized to eventually call it home.

That’s how we’ll create AI systems worthy of trust: not by blindly believing in their perfection but because we’ve intentionally designed them with integrity controls at every level.

A Challenge of Language

Other properties of security have common adjective forms, like “available” or “private.” Integrity does not, and that makes it hard to talk about. It turns out that there is a word in English: “integrous.” The Oxford English Dictionary recorded the word used in the mid-1600s but now declares it obsolete.

We believe that the word needs to be revived. We need the ability to describe a system with integrity. We must be able to talk about integrous systems design.

The Road Ahead

Ensuring integrity in AI presents formidable challenges. As models grow larger and more complex, maintaining integrity without sacrificing performance becomes difficult. Integrity controls often require computational resources that can slow systems down—particularly challenging for real-time applications. Another concern is that emerging technologies like quantum computing threaten current cryptographic protections. Additionally, the distributed nature of modern AI—which relies on vast ecosystems of libraries, frameworks, and services—presents a large attack surface.

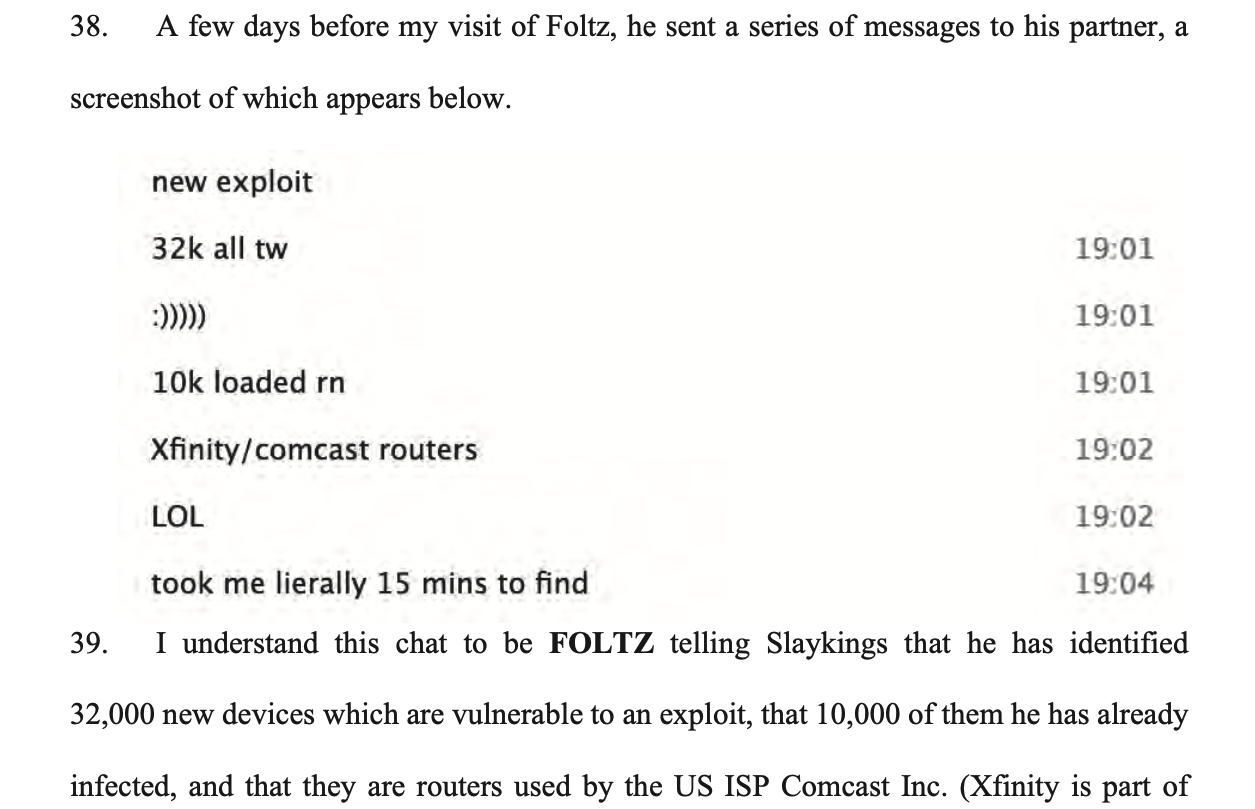

Beyond technology, integrity depends heavily on social factors. Companies often prioritize speed to market over robust integrity controls. Development teams may lack specialized knowledge for implementing these controls, and may find it particularly difficult to integrate them into legacy systems. And while some governments have begun establishing regulations for aspects of AI, we need worldwide alignment on governance for AI integrity.

Addressing these challenges requires sustained research into verifying and enforcing integrity, as well as recovering from breaches. Priority areas include fault-tolerant algorithms for distributed learning, verifiable computation on encrypted data, techniques that maintain integrity despite adversarial attacks, and standardized metrics for certification. We also need interfaces that clearly communicate integrity status to human overseers.

As AI systems become more powerful and pervasive, the stakes for integrity have never been higher. We are entering an era where machine-to-machine interactions and autonomous agents will operate with reduced human oversight and make decisions with profound impacts.

The good news is that the tools for building systems with integrity already exist. What’s needed is a shift in mind-set: from treating integrity as an afterthought to accepting that it’s the core organizing principle of AI security.

The next era of technology will be defined not by what AI can do, but by whether we can trust it to know or, especially, to do what’s right. Integrity—in all its dimensions—will determine the answer.

Sidebar: Examples of Integrity Failures

Ariane 5 Rocket (1996)

Processing integrity failure

A 64-bit velocity calculation was converted to a 16-bit output, causing an error called overflow. The corrupted data triggered catastrophic course corrections that forced the US $370 million rocket to self-destruct.
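The generic hazard of narrowing a wide value into a 16-bit integer can be shown in a few lines of Python. This sketch does not attempt to reproduce the flight software or its data; the sample values and the function names `unchecked_to_int16` and `checked_to_int16` are hypothetical, and the silent wraparound shown is simply what `ctypes.c_int16` does.

```python
import ctypes

INT16_MIN, INT16_MAX = -32768, 32767


def unchecked_to_int16(value: float) -> int:
    """Truncate to an integer and keep only the low 16 bits."""
    return ctypes.c_int16(int(value)).value


def checked_to_int16(value: float) -> int:
    """Convert to a signed 16-bit integer, refusing values that do not fit."""
    as_int = int(value)
    if not INT16_MIN <= as_int <= INT16_MAX:
        raise OverflowError(f"{value} does not fit in a signed 16-bit integer")
    return as_int


print(unchecked_to_int16(20000.0))  # 20000
print(unchecked_to_int16(40000.0))  # -25536

try:
    checked_to_int16(40000.0)
except OverflowError as err:
    print("rejected:", err)
```

The checked version turns a silently wrong number into an explicit error that the caller has to handle, which is the processing-integrity property at stake here.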

NASA Mars Climate Orbiter (1999)

Processing integrity failure

Lockheed Martin’s software calculated thrust in pound-seconds, while NASA’s navigation software expected newton-seconds. The failure caused the $328 million spacecraft to burn up in the Mars atmosphere.
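One common defense against this kind of mismatch is to carry the unit with the value instead of passing bare numbers between components. The sketch below is an illustration only: the `Impulse` class, the `apply_correction` consumer, and the sample value are hypothetical. The conversion factor is the standard one, since one pound-force is defined as 4.4482216152605 newtons.

```python
from dataclasses import dataclass

LBF_S_TO_N_S = 4.4482216152605  # one pound-force second in newton-seconds


@dataclass(frozen=True)
class Impulse:
    """An impulse stored internally in newton-seconds."""
    newton_seconds: float

    @classmethod
    def from_pound_seconds(cls, value: float) -> "Impulse":
        return cls(value * LBF_S_TO_N_S)

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)


def apply_correction(impulse: Impulse) -> float:
    """Hypothetical consumer that accepts only a unit-tagged Impulse."""
    if not isinstance(impulse, Impulse):
        raise TypeError("expected an Impulse, not a bare number")
    return impulse.newton_seconds


reported = 10.0  # a value produced in pound-seconds
print(apply_correction(Impulse.from_pound_seconds(reported)))  # about 44.48

try:
    apply_correction(reported)  # a bare number is rejected
except TypeError as err:
    print("rejected:", err)
```

Passing the bare 10.0 straight through would have understated the impulse by a factor of about 4.45, with nothing in the data to reveal the error.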

Microsoft’s Tay Chatbot (2016)

Processing integrity failure

Released on Twitter, Microsoft’s AI chatbot was vulnerable to a “repeat after me” command, which meant it would echo any offensive content fed to it.

Boeing 737 MAX (2018)

Input integrity failure

Faulty sensor data caused an automated flight-control system to repeatedly push the airplane’s nose down, leading to a fatal crash.

SolarWinds Supply-Chain Attack (2020)

Storage integrity failure

Russian hackers compromised the process that SolarWinds used to package its software, injecting malicious code that was distributed to 18,000 customers, including nine federal agencies. The hack remained undetected for 14 months.

ChatGPT Data Leak (2023)

Storage integrity failure

A bug in OpenAI’s ChatGPT mixed different users’ conversation histories. Users suddenly had other people’s chats appear in their interfaces with no way to prove the conversations weren’t theirs.

Midjourney Bias (2023)

Contextual integrity failure

Users discovered that the AI image generator often produced biased images of people, such as showing white men as CEOs regardless of the prompt. The AI tool didn’t accurately reflect the context requested by the users.

Prompt Injection Attacks (2023–)

Input integrity failure

Attackers embedded hidden prompts in emails, documents, and websites that hijacked AI assistants, causing them to treat malicious instructions as legitimate commands.

CrowdStrike Outage (2024)

Processing integrity failure

A faulty software update from CrowdStrike caused 8.5 million Windows computers worldwide to crash—grounding flights, shutting down hospitals, and disrupting banks. The update, which contained a software logic error, hadn’t gone through full testing protocols.

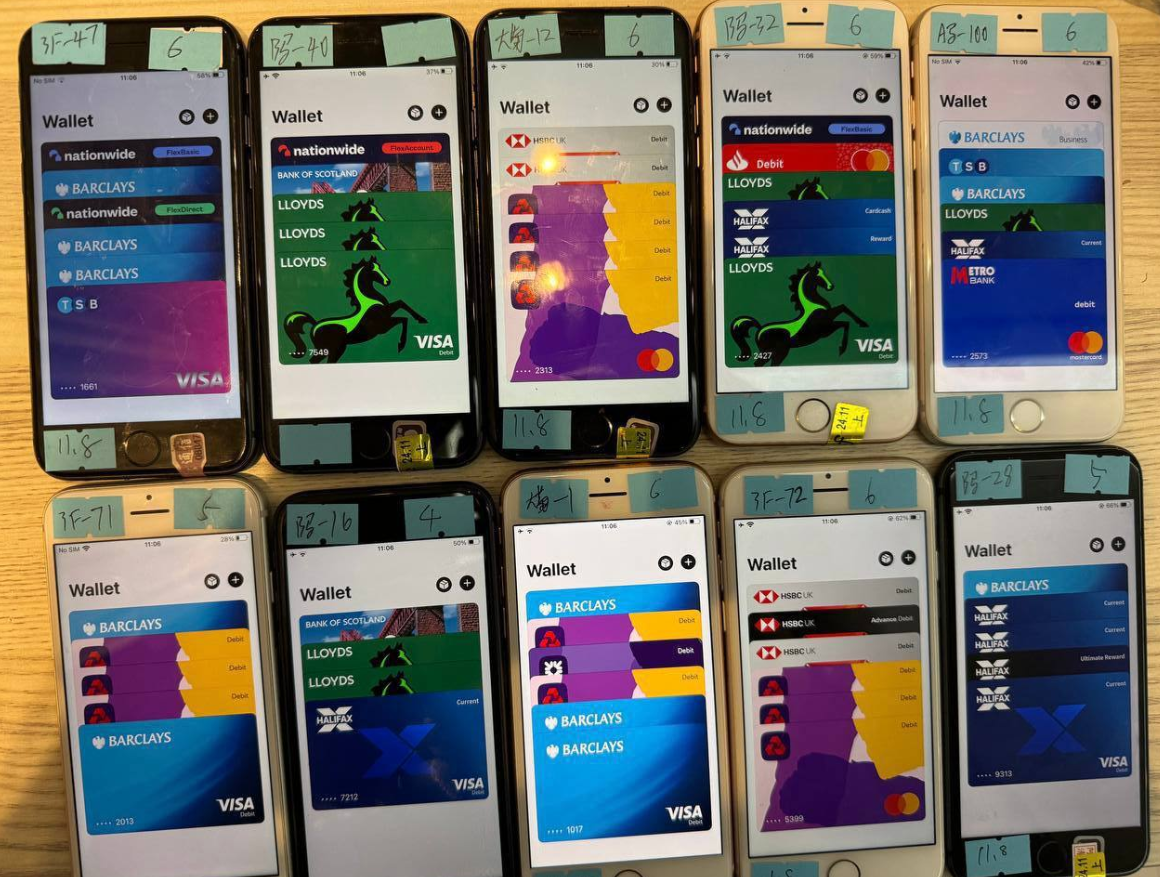

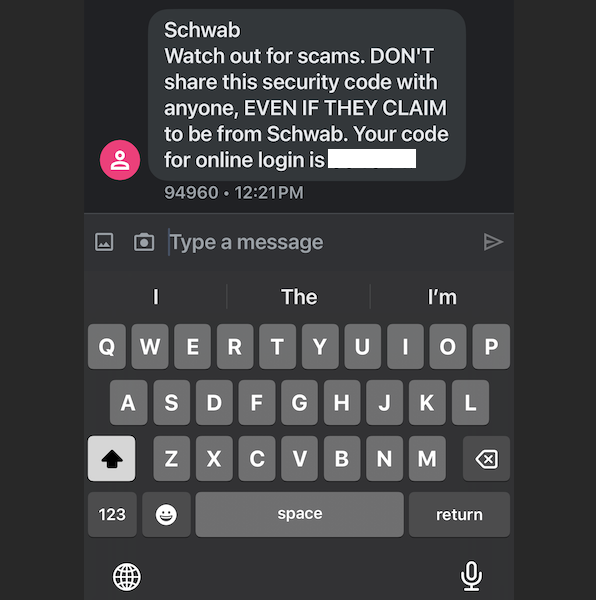

Voice-Clone Scams (2024)

Input and processing integrity failure

Scammers used AI-powered voice-cloning tools to mimic the voices of victims’ family members, tricking people into sending money. These scams succeeded because neither phone systems nor victims identified the AI-generated voice as fake.

This essay was written with Davi Ottenheimer, and originally appeared in IEEE Spectrum.

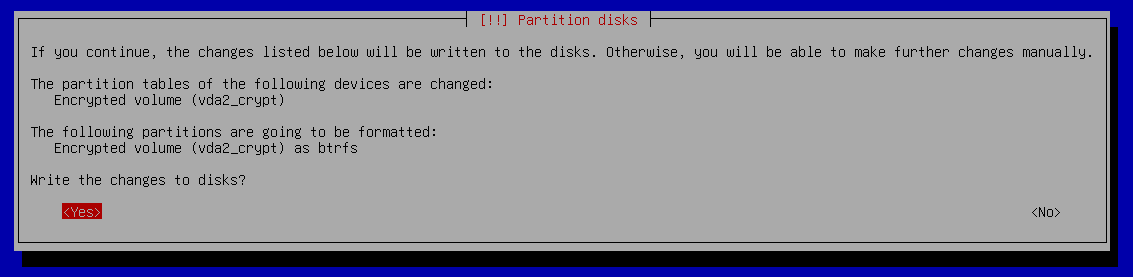

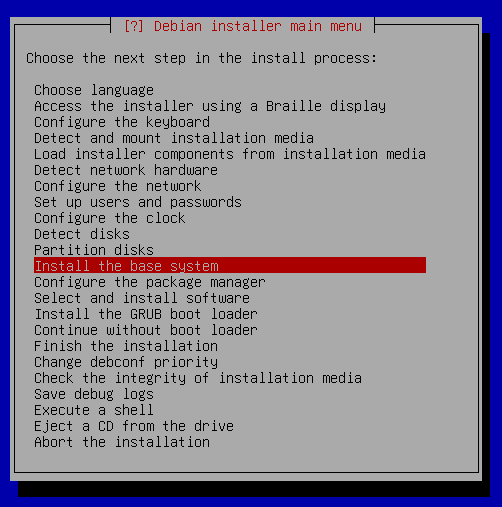

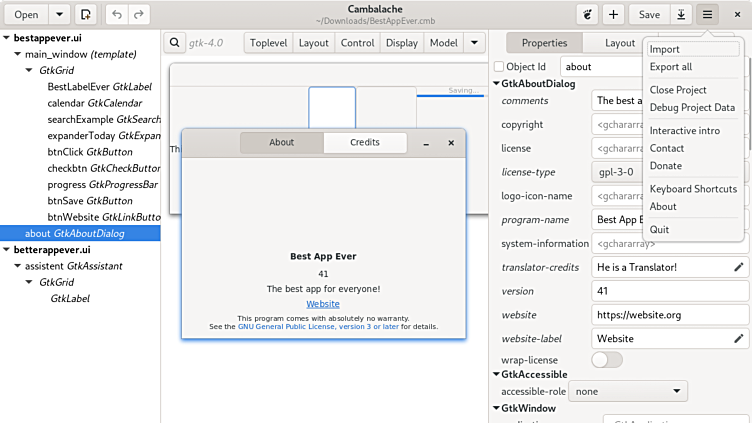

Changing Chrome Remote Desktop desktop size in GCP Windows.

When I connect to GCP Windows hosts with the default configuration, I get a 640x480 desktop.

Enabling the display device in the device configuration enables resizing from Windows.

