Introduction

“One of the peculiar things about the 'Net is it has no memory. (…) We’ve made our digital bet. Civilization now happens digitally. And it has no memory. This is no way to run a civilization. And the Web — its reach is great, but its depth is shallower than any other medium probably we’ve ever lived with.” (Kahle 01998)

In February 01998, the Getty Center in Los Angeles hosted the Time and Bits: Managing Digital Continuity conference, organized by the founders and thinkers of two non-profit organizations established two years earlier in San Francisco: the Internet Archive and The Long Now Foundation, both dedicated to long-term thinking and archiving.

The Internet Archive has since become a global example of digital archiving and an open library that provides access to millions of digitized pages from the web and paper books on its website. The Long Now Foundation’s central project involves the design and construction of a monumental clock intended to tick for the next 10,000 years, promoting long-term thinking alongside its lesser-known archival mission: the Rosetta Project.

The Time and Bits: Managing Digital Continuity conference brought together these organizations to discuss what the Internet Archive’s founder, Brewster Kahle, referred to as “our digital bet”: a novel, digital-only form of civilization with no history, posing challenges to the preservation of its immaterial cultural memory. Discussions at the conference raised concerns about the longevity of digital formats and explored potential archival and transmission solutions for the future. This foresight considered the ‘heritage’ characteristics of the digital world, which were yet to be defined as such on a global level. It was not until 02003 that UNESCO published a charter advocating for “digital heritage conservation”, distinguishing between “digital-born heritage” and digitized heritage (UNESCO 02003, Musiani et al. 02019). The Getty Center conference thus emerges as a precursor in the quest to preserve both digital data and analog information for future generations. This endeavor, the chapter argues, aligns with the longue durée, a conception of time and history developed by French historian Fernand Braudel during World War Two (Braudel 01958).

While the Internet Archive has envisioned such a mission through the continuous digital recording of web pages and the digitization of paper, sound, and video documents into bits format, The Long Now Foundation’s Rosetta Project began to take shape during the ‘Time and Bits’ conversations, offering a different approach to data conservation in an analog microscopic format, engraved on nickel disks.

Taking the 01998 gathering of the Internet Archive and Long Now Foundation as a starting point, this chapter aims to examine the challenges and strategies of ‘digital continuity management’ (or maintenance). It proposes to analyze the different ways these two case studies envision archiving and transmission to future generations, in both digital and analog formats — bits and nickel, respectively — for virtual web content and physical paper-based materials.

Through a comparative analysis of these two non-profit organizations, this chapter seeks to explore various archiving methods and tools, and the challenges they present in terms of time, space, innovation, maintenance, and ‘continuity’. By depicting two distinct visions of the future of archiving represented by these organizations, it highlights their shared mission of safeguarding, sharing, and providing universal access to information, despite their differing formats.

The method used for this analysis combines theoretical, comparative, and qualitative studies through an immersive research process spanning over three years in the San Francisco Bay Area. This process involved conducting interviews and participant observations during seminars, talks, and meetings held by both The Long Now Foundation and the Internet Archive. Additionally, archival research was conducted online, using resources such as the Internet Archive’s Wayback Machine and The Long Now Foundation’s blog dating back to 01996, as well as on-site at The Long Now Foundation.

This chapter adopts a multidisciplinary approach conjoining history, media, maintenance, and American studies to analyze the challenges faced by these two organizations in transmitting both material and intangible cultural heritage (UNESCO 02003).

The first part of this chapter concentrates on the future of archives and their longevity, a topic that was discussed during the 01998 Time and Bits conference. It suggests a parallel with the Braudelian longue durée perspective, which offers a novel understanding of time and history.

The second part focuses on digital transmission in ‘hard-drive form’ using the example of the Internet Archive and its Wayback Machine, comprising thousands of hard drives. The final segment of this chapter discusses the analog archival format chosen by The Long Now Foundation, represented by the Rosetta Disk, a small nickel disk engraved with thousands of pages of selected texts. This format is likened to a modern iteration of the Egyptian Rosetta Stone for preservation into the long-term future.

“Time and Bits: Managing Digital Continuity”…and maintenance in longue durée

“How long can a digital document remain intelligible in an archive?” This question, asked by futurist Jaron Lanier in one of the hundreds of messages posted on the Time and Bits forum that ran from October 01997 until June 01998, underscores not only concerns about the future ‘life’ of digital documents at the end of the 01990s, but also their meaning and understanding in archives for future generations. These concerns about digital preservation were central to discussions at the subsequent Time and Bits conference organized a few months later in February 01998 at the Getty Center in Los Angeles by the Getty Conservation Institute and the now defunct Getty Information Institute, in collaboration with The Long Now Foundation.

The Long Now Foundation, formed in 01996, emerged from discussions among thinkers and futurists who later became its board members. This group included Stewart Brand, recipient of the 01971 National Book Award for his Whole Earth Catalog and co-founder of the pioneering virtual community, the WELL, created in the 01980s (Turner 02006), engineer Danny Hillis, British musician and artist Brian Eno, technologists Esther Dyson and Kevin Kelly, and futurist Peter Schwartz (Momméja 02021). Schwartz, in particular, is the one who articulated the concept of the ‘long now’ as a span of 20,000 years — 10,000 years deep into the past and 10,000 years into the very distant future. This timeframe coincides with the envisioned lifespan of the monumental Clock being constructed by the foundation in West Texas. The choice of this specific duration marks the end of the last ice age about 10,000 years ago, a period that catalyzed the advent of agriculture and human civilization, with some scholars even identifying it as the onset of the Anthropocene epoch. Indeed, a group of scientists extends their analysis beyond the industrial era, which has generally been studied as the beginning of this human-induced transformation of our biosphere, considering the origins of agriculture as “the time when large-scale transformation of land use and human-induced species and ecosystem loss extended the period of warming after the end of the Pleistocene” (Henke and Sims 02020). For the founders of The Long Now Foundation, this 10,000-year perspective must therefore be developed in the opposite direction, towards the future (hence the expected duration of the Clock) forming the ‘Long Now’.

This chapter argues that the ‘long now’ promoted by the organization can be paralleled with the concept of longue durée put forth by Annales historian Fernand Braudel. Braudel began elaborating the idea of longue durée during his time as a prisoner of war in Germany. For five years, he diligently worked on his PhD dissertation, La Méditerranée et le monde méditerranéen à l'époque de Philippe II (Braudel 01949). It was during his internment that Braudel developed the concept of the ‘very long time’, a temporal construction that provided him solace from the traumatic events he experienced in the ‘short time’ and helped him gain insight into his condition by situating them on a much broader time scale (Braudel 01958). With newly stratified temporalities, from the immediate to the medium to the very long term, Braudel succeeded in escaping the space-time of which he was a prisoner, a ‘here’ and ‘now’ devoid of meaningful perspectives, when a longer ‘now’ would liberate him from the present moment. Longue durée was thus imagined as a novel long-term approach to history, diverging from traditional narratives that focused on brief periods and dramatic events, such as wars. This is what Braudel referred to as “a rushed, dramatic narrative” (Braudel 01958). A second, longer type of history, based on economic cycles and conjunctures, was described by Braudel as spanning several decades, while longue durée offered a novel type of history that transcended events and cycles, extending even further to encompass centuries, although the French historian refrained from specifying an exact timeframe.

Longue durée, alongside its modern Californian counterpart, the ‘long now’, prompts us to reconsider our understanding of history in time as a means to encapsulate events far beyond our lifetimes. Braudel insisted historians should incorporate longue durée into their work and rethink history as an ‘infrastructure’ composed of layers of ‘slow history’.

Given this perspective, how can we archive and transmit fast traditional history within the context of longue durée? In his foreword to the Time & Bits report, Barry Munitz, president and CEO of the J. Paul Getty Trust, explained the initiative behind the conference:

We take seriously the notion of long-term responsibility in the protection of important cultural information, which in many cases now is recorded only in digital formats. The technology that enables digital conversion and access is a marvel that is evolving at lightning speed. Lagging far behind, however, are the means by which the digital legacy will be preserved over the long term (Munitz 01998).

The two organizations selected for this chapter offer two distinct, yet complementary, visions of how archiving and transmitting should be approached, now and for the longue durée, in digital and analog formats.

Addressing the “problem of our vanishing memory” was a focal point of the Time & Bits conference encapsulated by Internet Archive founder Brewster Kahle’s question: “I think the issue that we are grappling with here is now that our cultural artifacts are in digital form, what happens?” (Kahle 01998). As noted by Stewart Brand, Kahle also pointed out that “one of the peculiar things about the 'Net is it has no memory. (…) We’ve made our digital bet. Civilization now happens digitally. And it has no memory. This is no way to run a civilization. And the Web—its reach is great, but its depth is shallower than any other medium probably we’ve ever lived with” (Kahle 01998).

As a way to resolve this ‘digital bet’ and the pressing need for ‘digital continuity’, Brewster Kahle embarked on a mission to archive the web on a massive scale, giving rise to the Internet Archive and its Wayback Machine: an archive comprising 20,000 hard drives and containing 866 billion web pages as of March 02024.

Like The Long Now Foundation, the Internet Archive is a non-profit organization founded in 01996 in San Francisco. In fact, both entities once occupied adjacent offices in the Presidio. Their missions can also be put in parallel: whereas The Long Now Foundation promotes long-term thinking through projects like the construction of a Clock and the preservation of foundational languages and texts of our civilization in analog form through the Rosetta Disk, the Internet Archive digitizes and archives analog documents and records digital textual heritage through its Wayback Machine.

The Internet Archive embarked on its mission with an imperative to save internet pages, immaterial data composed of bits, which had not previously been archived: “We began in 01996 by archiving the internet itself, a medium that was just beginning to grow in use. Like newspapers, the content published on the web was ephemeral ― but unlike newspapers, no one was saving it” (Internet Archive 02024). Despite the transient and intangible nature of web pages, the Internet Archive remains committed to this mission, continuing to archive internet pages in a digital format to this day, with the ambition to remain open and collaborative, “explicitly promoting bottom-up initiatives intended to revalue human intervention” (Musiani et al. 02019).

Brewster Kahle, who could be regarded as the first digital librarian in history, promotes “Universal Access to All Knowledge” and “Building Libraries Together”. These missions, as explained during the Internet Archive’s annual celebration on October 21, 02015, at its headquarters in San Francisco, highlight the organization’s commitment to a wide array of digital content, including internet pages, books, videos, music, and games. Therefore, the internet appears as a “heritage and museographic object” (Schafer 02012), with information worth saving and protecting for the future. While the Library of Congress recently acknowledged the significance of Twitter content as a form of heritage (Schafer 02012), the Internet Archive has stood as an advocate for the preservation and transmission of digital heritage since the 01990s. UNESCO further validated this recognition in 02003 by acknowledging the existence of “digital heritage as a common heritage” through a charter on the conservation of digital heritage (Musiani et al. 02019) where resources are ‘born digital’, before being, or even without ever being, analog:

Digital materials encompass a vast and growing range of formats, including texts, databases, still and moving images, audio, graphics, software, and web pages. Often ephemeral in nature, they require purposeful production, maintenance, and management to be retained. Many of these resources possess lasting value and significance, constituting a heritage that merits protection and preservation for current and future generations. This ever growing heritage may exist in any language, in any part of the world, and in any area of human knowledge or expression (UNESCO 02003).

The Internet Archive’s mission aligns perfectly with this definition, providing open access to documents that are “protected and preserved for current and future generations”, echoing once again The Long Now Foundation’s own mission. However, the pursuit of “universal access to all knowledge” raises questions about the quality or “representativeness of the archive” (Musiani et al. 02019) in the face of the abundance and diversity of the sources and formats available.

For instance, the music section of the Internet Archive connects visitors to San Francisco’s local counterculture history with a vast collection of recordings from Grateful Dead shows (17,453 items) that fans contributed to the organization in analog formats for digitization. This exchange has not only allowed the band’s fan community to flourish but has also bolstered the group’s popularity: “they started to record all those concerts and you know, there are I think 2,339 concerts that got played by the Grateful Dead (…) and all but 300 of those are here in the archive” (Barlow 02015). In this way, the Internet Archive confirms its role as a universal collaborative platform and effectively contributes to a “new era of cultural participation” (Severo and Thuillas 02020), one that is proper to Web 2.0 but which the non-profit has been championing since the 01990s.

However, for the Internet Archive, and digital technology in general, to truly guarantee the archiving of human heritage ‘for future generations’ over the years, whether initially analog or digital, it is imperative to continuously improve and update storage formats and units to combat obsolescence and adapt to evolving technologies:

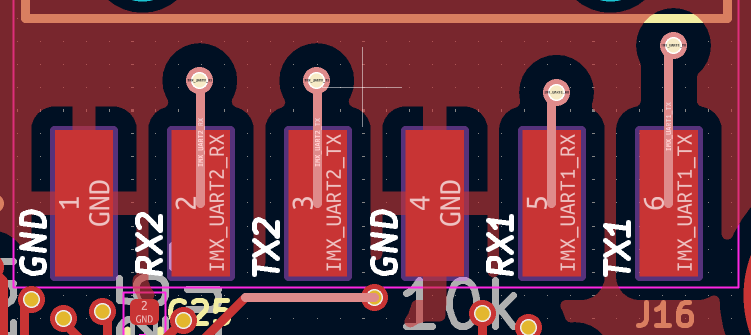

Of course, disk drives all eventually fail. So we have an active team that monitors drive health and replaces drives showing early signs for failure. We replaced 2,453 drives in 02015, and 1,963 year-to-date 02016… an average of 6.7 drives per day. Across all drives in the cluster the average ‘age’ (arithmetic mean of the time in-service) is 779 days. The median age is 730 days, and the most tenured drive in our cluster has been in continuous use for 6.85 years! (Gonzalez 02016)

If “all contributions produced on these platforms, whether amateur or professional, participate in the construction and appropriation of cultural and memorial heritage” (Severo and Thuillas 02020), reliance solely on digital technology poses a substantial challenge to the preservation of our cultures in longue durée. Aware of the inherent risks associated with archiving both analog and ‘digital heritage’ on storage media with limited lifespans, the Internet Archive must make the maintenance and replacement of the hard drives that comprise its Wayback Machine a constant priority.

From stone to disk: the Rosetta Project through time and space

To embody Braudel’s notion of ‘slow history’ and foster long-term thinking among people, The Long Now Foundation envisioned not only a monumental Clock as a time relay for future generations, but also a library for the deep future, soon materializing as an engraved artifact: The Rosetta Disk.

As explained by technologist Kevin Kelly, the concept of a miniature storage system comprising 350,000 pages of text engraved on a nickel disk, measuring just under eight centimeters in diameter, was proposed by Kahle during the Time and Bits: Managing Digital Continuity conference, “as a solution for long-term digital storage (…) with an estimated lifespan of 2,000–10,000 years” (Kelly 02008). These meeting discussions thus led to the emergence of the Rosetta Project within The Long Now Foundation, drawing inspiration from the Rosetta Stone. The final version of the Rosetta Project’s Disk was unveiled in 02008: 14,000 pages of information in 1,500 different languages (Welcher 02008). Crafted in analog format, it was conceived as the solution to the ever-changing landscape of digital technologies.

While the Internet Archive possesses infinite possibilities for archiving, The Long Now Foundation’s analog choice demands a thoughtful selection of texts to be micro-engraved onto the disk. The foundation decided to focus on several texts, both symbolic and universalist, such as the 01948 Universal Declaration of Human Rights, along with Genesis, chosen for its numerous translations. Materials with a linguistic or grammatical vocation, such as the Swadesh list — a compendium of words establishing a basic lexicon for each language — were included, as well as grammatical information including descriptions of phonetics, word formation, and broader linguistic structures like sentences.

Unlike Kahle's digital and digitized heritage project, the Foundation’s language archive is exclusively engraved, accessible only through a microscope. Such an archive is thus a finite heritage, with no scope for future development beyond the creation of new disks displaying new texts. While the Internet Archive and its Wayback Machine are constantly evolving, updated through constant digitization and the preservation of new web pages, the format and size of the nickel disk remain immutable.

To ensure the long-term survival of this archive, the foundation has embraced the “LOCKSS” principle — Lots of Copies Keep Stuff Safe — and has opted to duplicate its Rosetta Disk. By distributing these duplicates worldwide, the project stands a greater chance of lasting in longue durée: “this project in long-term thinking would do two things: it would showcase this new long-term storage technology, and it would give the world a minimal backup of human languages” (Kelly 02008).

The final version of the Rosetta Disk, containing 14,000 micro-engraved pages, was presented at the Foundation's headquarters in 02008. “Kept in its protective sphere to avoid scratches, it could easily last and be read 2,000 years into the future” (Welcher 02008). Beyond its resilience within the timeline of the Long Now, the analog Rosetta Disk aspires to endure across space as well. Remarkably, as the Foundation had been developing its project since 01999, they were contacted by the European Space Agency (ESA) and the Rosetta Mission team which, coincidentally, was working on the launch of an exploratory space probe aptly named Rosetta. The Rosetta probe was launched on March 2, 02004, aboard an Ariane 5G+ rocket from Kourou, with the mission of studying comet 67P/Churyumov-Gerasimenko (‘Tchouri’) located near Jupiter. On board the probe was the very first version of the Rosetta Disk, less comprehensive than the version unveiled in 02008, nevertheless containing six thousand pages of translated texts.

Conclusion

On November 12, 02014, over a decade after its departure from Earth and about three months after reaching Comet Tchouri, the Rosetta probe deployed its Philae lander onto the comet’s surface, where, despite unexpected rebounds, it eventually stabilized itself to conduct programmed analyses. Nearly two years later, on September 30, 02016, the Rosetta module, with the Rosetta Disk on board, joined Philae on Tchouri, thus marking the conclusion of the mission: “With Rosetta we are opening a door to the origin of planet Earth and fostering a better understanding of our future. ESA and its Rosetta mission partners have achieved something extraordinary today” (ESA 02014). Through a space mission focused on the future with the aim of better understanding the Earth’s past, the Rosetta Disk fulfilled its project to become an archive in longue durée, transcending temporal and spatial boundaries.

Almost ten years later, both the Rosetta Disk and the Internet Archive, through a selection of books and documents from its datasets, became part of an even larger spatial archive which also includes articles from Wikipedia and books from Project Gutenberg, all etched on thin sheets of nickel. The Arch Mission Foundation’s Lunar Library successfully landed on the Moon on February 22, 02024, thus reuniting for the first time the two non-profits’ archival materials in a cultural and civilizational preservation project, built to remain on the Moon’s surface throughout the longue durée.

The Time and Bits: Managing Digital Continuity conference did not present a single solution to the challenges of digital archives and data transmission. Instead, it offered a range of options and tools for web archives, digital data, and analog documents to address our ‘digital bet’. The two cases presented appear as two faces of the same disk — digital and analog — with a shared conservation objective: providing different means to consider longue durée and ensure archival continuity and maintenance in the long term. This continuity extends not only through time, but also across space, placing “digitally-born heritage” (Musiani et al. 02019) and more traditional forms of heritage on equal footing.

From the “creative city” (Florida 02002) of San Francisco, both organizations have managed to extend the boundaries of the “creative Frontier” (Momméja 02021), not only physically and digitally, but also through longue durée and space. From hard drives to disks, they offer a new form of coevolution between humans and machines, a ‘post-coevolution’ aimed at transmitting our cultural heritage to future generations through bits and nickel.

References

Brand, Stewart. 01999. The Clock of The Long Now: Time and Responsibility. New York: BasicBooks.

The European Space Agency. 02002. “Rosetta Disk Goes Back to the Future.” The European Space Agency. December 3. https://web.archive.org/web/20240423005130/https://sci.esa.int/web/rosetta/-/31242-rosetta-disk-goes-back-to-the-future.

———. n.d. “Enabling & Support – Rosetta.” The European Space Agency. https://web.archive.org/web/20240423005544/https://www.esa.int/Enabling_Support/Operations/Rosetta.

———. n.d. “Rosetta – Summary.” The European Space Agency. https://web.archive.org/web/20240423004357/https://sci.esa.int/web/rosetta/2279-summary.

———. n.d. “Where Is Rosetta?” The European Space Agency. https://web.archive.org/web/20240423003218/https://sci.esa.int/where_is_rosetta/.

Florida, Richard. 02002. The Rise of the Creative Class: And How It’s Transforming Work, Leisure, Community and Everyday Life. New York, NY: Basic Books.

Gonzalez, John. 02016. “20,000 Hard Drives on a Mission.” Internet Archive Blogs. October 25. https://web.archive.org/web/20240423002926/https://blog.archive.org/2016/10/25/20000-hard-drives-on-a-mission/.

Henke, Christopher R., and Benjamin Sims. 02020. Repairing Infrastructures: The Maintenance of Materiality and Power. https://web.archive.org/web/20240423002248/https://direct.mit.edu/books/oa-monograph/4962/Repairing-InfrastructuresThe-Maintenance-of.

Internet Archive. 02015. “Building Libraries Together, Celebrating the Passionate People Building the Internet Archive.” Internet Archive, San Francisco, October 21. https://archive.org/details/buildinglibrariestogether2015.

Internet Archive. 02024. “About the Internet Archive.” Internet Archive. https://web.archive.org/web/20240423001744/https://archive.org/about/.

Kahle, Brewster. 02011. “Universal Access to All Knowledge.” San Francisco, November 30. https://web.archive.org/web/20240423001555/https://longnow.org/seminars/02011/nov/30/universal-access-all-knowledge/.

Kahle, Brewster. 02016. “Library of the Future.” University of California Berkeley, Morrison Library, March 3. https://web.archive.org/web/20240423001315/https://bcnm.berkeley.edu/events/109/special-events/1004/library-of-the-future.

Kelly, Kevin, Alexander Rose, and Laura Welcher. “Disk Essays.” The Rosetta Project. https://web.archive.org/web/20240422235700/https://rosettaproject.org/disk/essays/.

Kelly, Kevin. 02008. “Very Long-Term Backup.” The Long Now Foundation. August 20. https://web.archive.org/web/20240423000131/https://longnow.org/ideas/very-long-term-backup/.

The Long Now Foundation. 01998. “Time and Bits: Managing Digital Continuity.” February 8. https://web.archive.org/web/20240423001231/https://longnow.org/events/01998/feb/08/time-and-bits/.

MacLean, Margaret G. H., Ben H. Davis, Getty Conservation Institute, Getty Information Institute, and Long Now Foundation, eds. 01998. “Time & Bits: Managing Digital Continuity.” [Los Angeles: J. Paul Getty Trust].

Momméja, Julie. 02021. “Du Whole Earth Catalog à la Long Now Foundation dans la Baie de San Francisco : Co-Évolution sur la “Frontière” Créative (1955–2020).” Paris: Paris 3 – Sorbonne Nouvelle. https://theses.fr/2021PA030027.

Musiani, Francesca, Camille Paloque-Bergès, Valérie Schafer, and Benjamin Thierry. 02019. “Qu’est-ce qu’une archive du Web?” https://books.openedition.org/oep/8713/.

The Rosetta Project. n.d. “Disk – Concept.” The Rosetta Project. https://web.archive.org/web/20240423002348/https://rosettaproject.org/disk/concept/.

———. n.d. “The Rosetta Blog.” The Rosetta Project. https://web.archive.org/web/20240423002731/https://rosettaproject.org/blog/.

———. n.d. “The Rosetta Project, A Long Now Foundation Library of Human Language.” The Rosetta Project. https://web.archive.org/web/20240423003014/https://rosettaproject.org/.

Schafer, Valérie. 02012. “Internet, Un Objet Patrimonial et Muséographique.” Colloque Projet pour un musée informatique et de la société numérique, Musée des arts et métiers, Paris. https://web.archive.org/web/20240423012521/http://minf.cnam.fr/PapiersVerifies/7.3_internet_objet_patrimonial_Schafer.pdf.

Severo, Marta, and Olivier Thuillas. 02020. “Plates-formes collaboratives : la nouvelle ère de la participation culturelle ?” Nectart 11 (2). Toulouse: Éditions de l’Attribut: 120–31. https://web.archive.org/web/20240423003238/https://www.cairn.info/revue-nectart-2020-2-page-120.htm.

Turner, Fred. 02006. From Counterculture to Cyberculture: Stewart Brand, the Whole Earth Network, and the Rise of Digital Utopianism. Chicago: University of Chicago Press.

UNESCO. 02004. “Records of the General Conference, 32nd Session, Paris, 29 September to 17 October 02003, v. 1: Resolutions.” UNESCO. General Conference, 32nd, 02003 [36221]. https://web.archive.org/web/20240423004242/https://unesdoc.unesco.org/ark:/48223/pf0000133171.page=81.

_

"Time, bits, and nickel: Managing digital and analog continuity" was originally published in Exploring the Archived Web during a Highly Transformative Age: Proceedings of the 5th international RESAW conference, Marseille, June 02023 (Ed. by Sophie Gebeil & Jean-Christophe Peyssard.) Licensed under CC-BY-4.0.

Updated my timezone tool.

Updated my timezone tool.

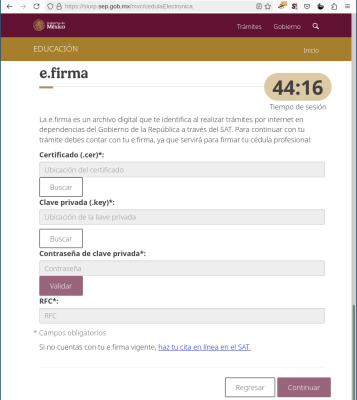

