Midweek, I'll refrain from politics... or the things that are now obvious.

I just finished writing/editing/formatting an entire nonfiction book (about AI!) and wish to celebrate by offering a gift to you all. A little tale of optimism and hope... illustrating that one person -- not a superhero or mighty warrior or politician or genius -- might make all the difference in the world. With courage, hard work and neighborly good will.*

This story is one of many that can also be found in The Best of David Brin.

=========================================

A Professor at Harvard

By David Brin

Dear Lilly,

This transcription may be a bit rough. I’m dashing it off quickly for reasons that should soon be obvious.

Exciting news! Still, let me ask that you please don’t speak of this, or let it leak till I’ve had a chance to put my findings in a more academic format.

Since May of 2002, I’ve been engaged to catalogue the Thomas Kuiper Collection, which Harvard acquired in that notorious bidding war a couple of years ago, on eBay. The acclaimed astronomer-philosopher had been amassing trunkloads of documents from the late Sixteenth and early Seventeenth Centuries -- individually and in batches -- with no apparent pattern, rhyme or reason. Accounts of the Dutch Revolution. Letters from Johannes Kepler. Sailing manifests of ports in southern England. Ledgers and correspondence from the Italian Inquisition. Early documents of Massachusetts Bay Colony and narratives about the establishment of Harvard College.

The last category was what most interested the trustees, so I got to work separating them from the apparent clutter. That is, it seemed clutter, an unrelated jumble... till intriguing patterns began to emerge.

Let me trace the story as it was revealed to me, in bits and pieces. It begins with the apprenticeship of a young English boy named Henry Stephens.

#

Henry was born to a family of petit-gentry farmers in Kent, during the year 1595. According to parish records, his birth merited noting as mirabilus -- he was premature and should have died of the typhus that claimed his mother. But somehow the infant survived.

He arrived during a time of turmoil. Parliament had passed a law that anyone who questioned the Queen's religious supremacy, or persistently absented himself from Anglican services, should be imprisoned or banished from the country, never to return on pain of death. Henry’s father was a leader among the “puritan” dissenters in one of England’s least tolerant counties. Hence, the family was soon hurrying off to exile, departing by ship for the Dutch city of Leiden.

Leiden, you’ll recall, was already renowned for its brave resistance to the Spanish army of Philip II. As a reward, Prince William of Orange and the Dutch parliament gave the city a choice: freedom from taxes for a hundred years, or the right to establish a university. Leiden chose a university.

Here the Stephens family joined a growing expatriate community -- English dissenters, French Huguenots, Jews and others thronging into the cities of Middelburg, Leiden, and Amsterdam. Under the Union of Utrecht, Holland was the first nation to explicitly respect individual political and religious liberty and to recognize the sovereignty of the people, rather than the monarch. (Both the American and French Revolutions specifically referred to this precedent.)

Henry was apparently a bright young fellow. Not only did he adjust quickly -- growing up multilingual in English, Dutch and Latin -- but he showed an early flair for practical arts like smithing and surveying.

The latter profession grew especially prominent as the Dutch transformed their landscape, sculpting it with dikes and levees, claiming vast acreage from the sea. Overcoming resistance from his traditionalist father, Henry managed to get himself apprenticed to the greatest surveyor of the time, Willebrord Snel van Leeuwen -- or Snellius. In that position, Henry would have been involved in a geodetic mapping of Holland -- the first great project using triangulation to establish firm lines of location and orientation -- using methods still applied today.

While working for Snellius, Henry apparently audited some courses offered by Willebrord’s father -- Professor Rudolphus Snellius -- at the University of Leiden. Rudolphus lectured on "Planetarum Theorica et Euclidis Elementa" and evidently was a follower of Copernicus. Meanwhile the son -- also authorized to teach astronomy -- specialized in the Almagest of Ptolemeus!

The Kuiper Collection contains a lovely little notebook, written in a fine hand -- though in rather vulgar Latin -- wherein Henry Stephens describes the ongoing intellectual dispute between those two famous Dutch scholars, Snellius elder and younger. Witnessing this intellectual tussle first-hand must have been a treat for Henry, who would have known how few opportunities there were for open discourse in the world beyond Leiden.

#

But things were just getting interesting. For at the very same moment that a teenage apprentice was tracking amiable family quarrels over heliocentric versus geocentric astronomies, some nearby Dutchman was busy crafting the world’s first telescope.

The actual inventor is unknown -- secrecy was a bad habit practiced by many innovators of that time. Till now, the earliest mention was in September 1608, when a man ‘from the low countries’ offered a telescope for sale at the annual Frankfurt fair. It had a convex and a concave lens, offering a magnification of seven. So, I felt a rising sense of interest when I read Henry’s excited account of the news, dated six months earlier (!), offering some clues that scholars may find worth pursuing.

Later though. Not today. For you see, I left that trail just as soon as another grew apparent. One far more exciting.

Here’s a hint: word of the new instrument, flying across Europe by personal letter, soon reached a certain person in northern Italy. Someone who, from description alone, was able to re-invent the telescope and put it to exceptionally good use.

Yes, I’m referring to the Sage of Pisa. Big G himself! And soon the whole continent was abuzz about his great discoveries -- the moons of Jupiter, lunar mountains, the phases of Venus and so on. Naturally, all of this excited old Rudolphus, while poor grumpy Willebrord muttered that it seemed presumptuous to draw cosmological conclusions from such evidence. Both Snellius patris and filio agreed, however, that it would be a good idea to send a representative south, as quickly as possible, to learn first-hand about any improvements in telescope design that could aid the practical art of surveying.

So it was that in the year 1612, at age seventeen, young Henry Stephens of Kent headed off to Italy...

...and there the documented story stops for a few years. From peripheral evidence -- bank records and such -- it would appear that small amounts were sent to Pisa from Snel family accounts in the form of a ‘stipend’. Nothing large or well-attributed, but a steady stream that lasted until about 1616, when “H.Stefuns” abruptly reappears in the employment ledger of Willebrord the surveyor.

What was Henry up to all that time? One might squint and imagine him counting pulse-beats in order to help time a pendulum’s sway. Or using his keen surveyor’s eye to track a ball’s descent along an inclined plane. Did he help to sketch Saturn’s rings? Might his hands have dropped two weights -- heavy and light -- over the rail of a leaning tower, while the master physicist stood watching below?

There is no way to tell. Not even from documents in the Kuiper Collection.

There is, however, another item from this period that Kuiper missed, but that I found in a scan of Vatican archives. An early letter from the Italian scientist Evangelista Torricelli to someone he calls “Uncle Henri” -- whom he apparently met as a child around 1614. Oblique references are enticing. Was this “Henri” the same man with whom Torricelli would have later adventures?

Alas, the letter has passed through so many collectors’ hands over the years that its provenance is unclear. We must wait some time for Torricelli to enter our story in a provable or decisive way.

#

Meanwhile, back to Henry Stephens. After his return to Leiden in 1616, there is little of significance for several years. His name appears regularly in account ledgers, and also on survey maps, where he now signs on his own behalf as people begin to rely ever more on the geodetic arts he helped develop. Willebrord Snellius was by now hauling in f600 per annum and Journeyman Henry apparently earned his share.

Oh, a name very similar to Henry’s can be found on the membership rolls of the Leiden Society, a philosophical club with highly distinguished membership. The spelling is slightly different, but people were lackadaisical about such things in those days. Anyway, it’s a good guess that Henry kept up his interest in science, paying keen attention to new developments.

Then, abruptly, his world changed again.

#

Conditions had grown worse for dissenters back in England. Henry’s father, having returned home to press for concessions from James I, was rewarded with imprisonment. Finally, the King offered a deal: amnesty in exchange for a new and extreme form of exile -- participation in a fresh attempt to settle an English colony in the New World.

Of course, everyone knows about the Pilgrims, their reasons for fleeing England and setting forth on the Mayflower, imagining that they were bound for Virginia, though by chicanery and mischance they wound up instead along the New England coast above Cape Cod. All of that is standard catechism in American History One-A, offering a mythic basis for our Thanksgiving Holiday. And much of it is just plain wrong.

For one thing, the Mayflower did not first set forth from Plymouth, England. It only stopped there briefly to take on a few more colonists and supplies, having actually begun its voyage in Holland. The expatriate community was the true source of people and material.

And right there, listed among the ship’s complement, having obediently joined his father and family, you will find a stalwart young man of twenty-five -- Henry Stephens.

#

Again, details are sketchy. After a rigorous crossing oft portrayed in book and film, the Pilgrims arrived at Plymouth Rock on December 21, 1620.

Professor Kuiper hunted among colonial records and found occasional glimpses of our hero. Apparently he survived that terrible first winter and did more than his share to help the young colony endure. Relations with the local natives were crucial and Professor Kuiper scribbled a number of notes which I hope to follow up on later. One of them suggests that Henry went west for some time to live among the Mohegan and other tribes, exploring great distances, making drawings and collecting samples of flora and fauna.

If so, we may have finally discovered the name of the “American friend” who supplied William Harvey with his famous New World Collection, the core element upon which Edmond Halley later began sketching his Theory of Evolution!

Henry’s first provable reappearance in the record comes in 1625, with his marriage to Prosper White-Moon Forest -- a name that provokes interesting speculation. There is no way to verify that his wife was a Native American woman, though subsequent township entries show eight children, only one of whom appears to have died young -- apparently a happy and productive family for the time. Certainly, any bias or hostility toward Prosper must have been quelled by respect. Her name is noted prominently among those who succored the sick during the pestilence year of 1627.

Further evidence of local esteem came in 1629 when Henry was engaged by the new Massachusetts Bay Colony as official surveyor. This led to what was heretofore his principal claim for historical notice, as architect who laid down the basic plan for Boston Town. A plan that included innovative arterial and peripheral lanes, looking far beyond the town’s rude origins. As you may know, it became a model for future urban design that would be called the New England Style.

This rapid success might have led Henry directly to a position of great stature in the growing colony, had not events brought his tenure to an abrupt end in 1631. That was the year, you’ll recall, when Roger Williams stirred up a hornet’s nest in the Bay Colony, by advocating unlimited religious tolerance -- even for Catholics, Jews and infidels.

Forced temporarily to flee Boston, Williams and his adherents established a flourishing new colony in Rhode Island -- before returning to Boston in triumph in 1634. And yes, the first township of this new colony, this center of tolerance, was surveyed and laid out by you-know-who.

#

It’s here that things take a decidedly odd turn.

Odd? That doesn’t half describe how I felt when I began to realize what happened next. Lilly, I have barely slept for the last week! Instead, I popped pills and wore electrodes in order to concentrate as a skein of connections began taking shape.

For example, I had simply assumed that Professor Kuiper’s hoard was so eclectic because of an obsessive interest in a certain period of time -- nothing more. He seemed to have grabbed things randomly! So many documents, with so little connecting tissue between them.

Take the rare and valuable first edition that many consider the centerpiece of his collection -- a rather beaten but still beautiful copy of "Dialogho Sopra I Due Massimi Sistemi Del Mondo" or “A Dialogue Concerning Two Systems Of The World.”

(This document alone helped drive the aiBay bidding war, which Harvard eventually topped because the Collection also contained many papers of local interest.)

A copy of the Dialogue! I felt awed just touching it with gloved hands. Did any other book do more to propel the birth of modern science? The debate between the Copernican and Ptolemaic astronomical systems reached its zenith within this publication, sparking a frenzy of reaction -- not all of it favorable! Responding to this implicit challenge, the Papal Palace and the Inquisition were so severe that most of Italy’s finest researchers emigrated during the decade that followed, many of them settling in Leiden and Amsterdam.

That included young Evangelista Torricelli, who by 1631 was already well-known as a rising star of physical science. Settling in Holland, Torricelli commenced rubbing elbows with friends of his “Uncle Henri” and performing experiments that would lead to invention of the barometer.

In correspondence that year, Torricelli shows deep worry about his old master, back in Pisa. Often he would use code words and initials. Obscurity was a form of protective covering in those days and he did not want to get the old man in even worse trouble. It would do no good for “G” to be seen as a martyr or cause célèbre in Protestant lands up north. That might only antagonize the Inquisition even further.

Still, Torricelli’s sense of despond grew evident as he wrote to friends all over Europe, passing on word of the crime being committed against his old master. Without naming names, Torricelli described the imprisonment of a great and brilliant man. Threats of torture, the coerced abjuration of his life’s work... and then even worse torment as the gray-bearded Professori entered confinement under house arrest, forbidden ever to leave his home or stroll the lanes and hills, or even to correspond (except clandestinely) with other lively minds.

#

What does all of this have to do with that copy of “Dialogho” in the Kuiper Collection?

Like many books that are centuries old, this one has accumulated a morass of margin notes and annotations, scribbled by various owners over the years -- some of them cogent glosses upon the elegant mathematical and physical arguments, and others written by perplexed or skeptical or hostile readers. But one large note especially caught my eye. Latin words on the flyleaf, penned in a flowing hand. Words that translate as:

To the designer of Providence.

Come soon, deliverance of our father.

All previous scholars who examined this particular copy of “Dialogho” have assumed that the inscription on the flyleaf was simply a benediction or dedication to the Almighty, though in rather unconventional form.

No one knew what to make of the signature, consisting of two large letters.

ET.

#

Can you see where I’m heading with this?

Struck by a sudden suspicion, I arranged for Kuiper’s edition of “Dialogho” to be examined by the Archaeology Department, where special interest soon focused on dried botanical materials embedded at the tight joining of numerous pages. All sorts of debris can settle into any book that endures four centuries. But lately, instead of just brushing it away, people have begun studying this material. Imagine my excitement when the report came in -- pollen, seeds and stem residue from an array of plant types... nearly all of them native to New England!

It occurred to me that the phrase “designer of Providence” might not -- in this case -- have solely a religious import!

Could it be a coded salutation to an architectural surveyor? One who established the street plan of the capital of Rhode Island?

Might “father” in this case refer not to the Almighty, but instead to somebody far more temporal and immediate -- the way two apprentices refer to their beloved master?

What I can verify from the open record is this. Soon after helping Roger Williams return to Boston in triumph, Henry Stephens hastily took his leave of America and his family, departing on a vessel bound for Holland.

#

Why that particular moment? It should have been an exciting time for such a fellow. The foundations for a whole new civilization were being laid. Who can doubt that Henry took an important part in early discussions with Williams, Winthrop, Anne Hutchinson and others -- deliberations over the best way to establish tolerance and lasting peace with native tribes. How to institute better systems of justice and education. Discussions that would soon bear surprising fruit.

And yet, just as the fruit was ripening, Stephens left, hurrying back to a Europe that he now considered decadent and corrupt. What provoked this sudden flight from his cherished New World?

It was July, 1634. Antwerp shipping records show him disembarking there on the 5th.

On the 20th a vague notation in the Town Hall archive tells of a meeting between several guildmasters and a group of ‘foreign doctors’ -- a term that could apply to any group of educated people from beyond the city walls. Only the timing seems provocative.

In early August, the Maritime Bank recorded a large withdrawal of 250 florins from the account of Willebrord Snellius, authorized in payment to ‘H. Stefuns’ by letter of credit from Leiden.

Travel expenses? Plus some extra for clandestine bribes? Yes, the clues are slim even for speculating. And yet we also know that at this time the young exiled scholar, Evangelista Torricelli, vacated his home. Bidding farewell to his local patrons, he then mysteriously vanished from sight forever.

So, temporarily, did Henry Stephens. For almost a year there is no sign of either man. No letters. No known mention of anyone seeing them...

...not until the spring of 1635, when Henry stepped once more upon the wharf in Boston Town, into the waiting arms of Prosper and their children. Sons and daughters who presumably clamored around their Papa, shouting the age-old refrain --

“What did you bring me? What did you bring me?”

What he brought them was the future.

#

Oops, sorry about that, Lilly. You must be chafing for me to get to the point.

Or did you cheat?

Have you already done a quick mentat-scan of the archives, skipping past Henry’s name on the Gravenhage ship manifest, looking to see who else disembarked along with him that bright April day?

No, it won’t be that obvious. They were afraid, you see, and with good reason.

True, the Holy See quickly forgave the fugitive and declared him safe from retribution. But the secretive masters of the Inquisition were less eager to pardon a famous escapee. They had already proved relentless in pursuit of those who slip away. While pretending that he still languished in custody, they must have sent agents everywhere, searching...

So, look instead for assumed names! Protective camouflage.

Try Mr. Quicksilver, which was the common word in English for mercury, a metal that is liquid at room temperature and a key ingredient in early barometers. Is the name familiar? It would be if you went to this university. And now it’s plain -- that had to be Torricelli! A flood of scholarly papers may come from this connection, alone. An old mystery solved.

But move on now to the real news. Have you scanned the passenger list carefully?

How about “Mr. Kinneret”?

Kinneret -- one of the alternate names, in Hebrew, for the Sea of Galilee.

#

Yes, dear. Kinneret.

I’m looking at his portrait right now, on the Wall of Founders. And despite obvious efforts at disguise -- no beard, for example -- it astonishes me that no one has commented till now on the resemblance between Harvard’s earliest Professor of Natural Philosophy and the scholar who, we are told, died quietly under house arrest in Pisa, way back in 1642.

It makes you wonder. Would a Catholic savant from “papist” Italy have been welcome in Puritan Boston -- or on the faculty of John Harvard’s new college -- without the quiet revolution of reason that Roger Williams set in motion?

Would that revolution have been so profound or successful, without strong support from the Surveyor’s Guild and the Seven United Tribes?

Lacking the influence of Kinneret, might the American tradition of excellence in mathematics and science have been delayed for decades? Maybe centuries?

#

Sitting here in the Harvard University Library, staring out the window at rowers on the river, I can scarcely believe that less than four centuries have passed since the Gravenhage docked not far from here on that chilly spring morning of 1635. Three hundred and sixty-seven years ago, to be exact.

Is that all? Think about it, Lilly, just fifteen human generations, from those rustic beginnings to the dawn of a new millennium. How the world has changed.

Ill-disciplined, I left my transcriber set to record Surface Thoughts, and so these personal musings have all been logged for you to savor, if you choose high-fidelity download. But can even that convey the emotion I feel while marveling at the secret twists and turns of history?

If only some kind of time -- or para-time -- travel were possible, so history could become an observational... or even experimental... science! Instead, we are left to use primitive methods, piecing together clues, sniffing and burrowing in dusty records, hoping the essential story has not been completely lost.

Yearning to shed a ray of light on whatever made us who we are.

#

How much difference can one person make, I wonder? Even one gifted with talent and goodness and skill -- and the indomitable will to persevere?

Maybe some group other than the Iroquois would have invented the steamboat and the Continental Train, even if James Watt hadn’t emigrated and ‘gone native’. But how ever could the Pan American Covenant have succeeded without Ben Franklin sitting there in Havana, to jest and soothe all the bickering delegates into signing?

How important was Abraham Lincoln’s Johannesburg Address in rousing the world to finish off slavery and apartheid? Might the flagging struggle have failed without him? Or is progress really a team effort, the way Kip Thorne credits his colleagues -- meta-Einstein and meta-Feynman -- claiming that he never could have created the Transfer Drive without their help?

Even this fine Widener Library where I sit -- bequeathed to Harvard by one of the alumni who died when Titanic hit that asteroid in 1912 -- seems to support the notion that things will happen pretty much the same, whether or not a specific individual or group happens to be on the scene.

#

No one can answer these questions. My own recent discoveries -- following a path blazed by Kuiper and others -- don’t change things very much. Except perhaps to offer a sense of satisfaction -- much like the gratification Henry Stephens must have felt the day he stepped down the wharf, embracing his family, shaking the hand of his friend Williams, and breathing the heady air of freedom in this new world...

... then turning to introduce his friends from across the sea. Friends who would do epochal things during the following twenty years, becoming legends while Henry himself faded into contented obscurity.

Can one person change the world?

Maybe not.

So instead let’s ask: what would Harvard be like, if not for Quicksilver-Torricelli?

Or if not for Professor Galileo Galilei?

### ###


Addendum in 2026. Sure, optimism can be hard to come by right now. Especially as the Confederacy - having captured the American capital in this latest phase of the 240-year Civil War - is expressing its classic manias, seemingly determined to take this where it always ends. At Yorktown. At Appomattox.

Certainly I'll not gloat as scores of sage pundits and pols admit - at long last - what I've said for a decade. That it's been blackmail, all along.

Not just because of what's been revealed (so far) in the partial/redacted Epstein Files. But because only coercion can explain the uniformity of craven inaction by those cowards who won't step up for their country, for justice, for sanity... or for their children. Not dogma or ideology or graft... none of the classic diagnoses can explain why even just TEN haven't stepped across the aisle in the House, to rejoin America. To wipe that smirk off Mike Johnson's so-brown nose.

Replay the SOTU and look at that side, see the desperation to express placating obeisance for their master. And underneath... the fear.

One, even just one could make a difference...

...as in the story that I offered you today. Let it inspire you, if only a little.

Persevere.

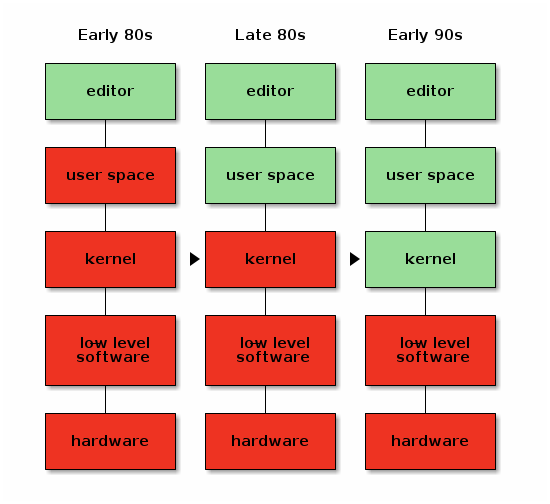

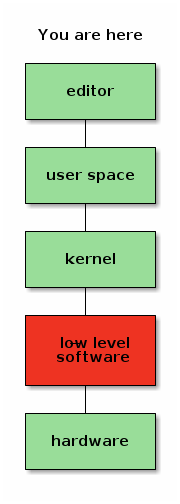

I’m Hellen Chemtai, an intern at Outreachy working with the Debian OpenQA team on Images Testing. This is the final week of the internship. This is just a start for me, as I will continue contributing to the community. I am grateful for the opportunity to work with the Debian OpenQA team as an Outreachy intern. I have had the best team welcoming me to Open Source.


The next Debconf happens in Japan. Great news. Feels like we came a long way, but I didn't personally do much, I just made the first moves.


The conceptual model from our paper, visualizing possible institutional configurations among Wikipedia projects that affect the risk of governance capture.


has featured the
